Catch up on all the best of CX circle London

Step into the future of human digital experiences with VICE, Lovehoney, Sky and more leading brands.

Building a great customer experience (CX) is key to digital success. Unfortunately, customer expectations don’t sit still, so what constitutes ‘great’ is a moving target. And there’s only one way to ensure you’re hitting that moving target: test, test, and test again. (Then repeat.)

But what elements of your digital CX should you be testing? Well, we’ve got some ideas for you, courtesy of a panel session that took place at our recent CX circle event in London, led by AB Tasty’s Devon Boyd.

Devon sat down with eCommerce experts from skincare brand Clarins, chocolate manufacturer Hotel Chocolat and travel company On the Beach to find out their biggest challenges around CX—and how A/B testing is helping them overcome these hurdles to deliver a great CX for their customers.

The session—which you can now watch in full on-demand—included seven fantastic A/B testing examples, which we’ve used to compile a list of five top tips to help you A/B test your way to an optimized digital experience for your customers.

But before we start, let’s remind ourselves why testing is so important and will be more important than ever as we head into 2023…

Creating great digital CX hinges on understanding what your customers want and need from you. You need to discover what engages (and delights) them, what disengages (and repels) them—and innovate your online experience to meet their needs.

Whether you’re redesigning your entire website or app (as Hotel Chocolat has) or launching new pages or features, you need to understand how your audience feels about it. Every innovation should be considered an experiment—and you need to test to collect evidence on how those innovations are received.

With recent economic turmoil creating a serious crisis in consumer confidence, it’s arguably never been more important to take your customers’ temperature. Many of your customers will be feeling the pinch right now, and so will be acutely sensitive to the experience they’re being served. Putting a foot wrong at a time like this could seriously damage your brand (and your bottom line!).

By the same token, getting your digital experience right and meeting customers where they are could be more powerful than ever, and A/B testing is a great tool for doing exactly that. Of course, there are plenty of A/B testing tools to choose from.

So without further ado, here are seven A/B test examples to inspire you—plus tips for testing excellence…

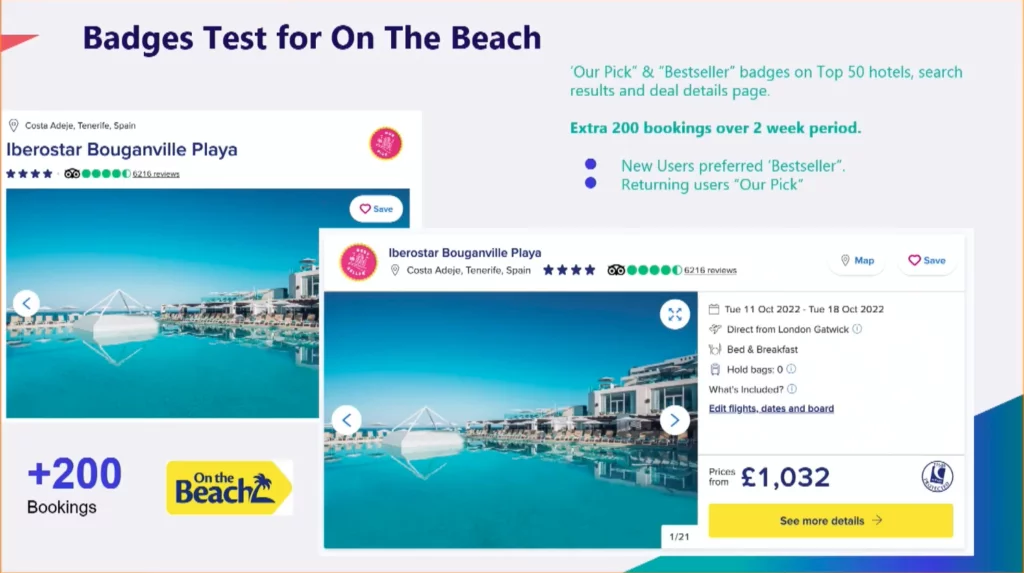

On the Beach wanted to call out the 50 top hotels their customers can book via their website. They decided to experiment by adding “Our Pick” and “Bestseller” badges to those hotels—and within two weeks saw 200 extra bookings for those hotels. So far, so great.

But what made this test particularly interesting for Alex Mclean (Conversion and Optimization Manager at On The Beach) was segmenting the test results by new visitors vs. returning users. This segmentation showed that new users converted more on hotels with the “Bestseller” badge, while returning users tended to opt for hotels adorned with “Our Pick”.

“We think this shows that new users, who aren’t yet familiar with our brand, are more swayed by social proof than our endorsement,” says Alex. “But as users return with more trust for our brand, they seek out our opinion—which is great, because it shows that our mission to be beach experts is working!”

The takeaway: Segment your A/B test results to learn about your audience

Every customer that comes to your website will be unique, with their own relationship to your brand and a particular set of needs and priorities. Unsurprisingly, our panel speakers all stressed the importance of creating journeys that cater to every customer.

“We have one website that every customer funnels through, but all of those customers will want different things—and we have to give them all a different, satisfying experience. To do that, we have to understand our customer base to push the right message at the right time for the right people.”

—Alex Mclean, Conversion and Optimization Manager at On The Beach

Plus, as Alejandra Salazar, eCommerce and Content Manager at Clarins, pointed out, serving customers with the right message at the right time doesn’t only help you satisfy them—it also signals to them that you understand them on a personal level.

Intrinsic to hitting the mark here is the segmentation of your customer base—and, as Alex’s A/B test example demonstrated to us, you can apply segmentation to A/B testing to gain a better understanding of what makes each group tick (and click).

So: segment your analytics on tests (even if those tests weren’t segmented initially), and you can discover more about your audience, and how subsequent innovations will best fit into different segments’ journeys.
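To make the idea concrete, here is a minimal sketch of segmenting test results after the fact. The records, segment names and conversion figures below are invented for illustration (they are not On the Beach's data), and `conversion_by_segment` is a hypothetical helper rather than part of any testing tool.

```python
from collections import defaultdict

# Hypothetical per-visit records: (visitor_type, badge, converted).
# All figures are invented for illustration only.
records = (
    [("new", "Bestseller", True)] * 2 + [("new", "Bestseller", False)] * 2
    + [("new", "Our Pick", True)] * 1 + [("new", "Our Pick", False)] * 3
    + [("returning", "Bestseller", True)] * 1 + [("returning", "Bestseller", False)] * 3
    + [("returning", "Our Pick", True)] * 3 + [("returning", "Our Pick", False)] * 1
)


def conversion_by_segment(rows):
    """Return {(visitor_type, badge): conversion rate} for the given rows."""
    visits = defaultdict(int)
    conversions = defaultdict(int)
    for visitor_type, badge, converted in rows:
        key = (visitor_type, badge)
        visits[key] += 1
        conversions[key] += int(converted)
    return {key: conversions[key] / visits[key] for key in visits}


# Print one line per segment/badge pair so the groups can be compared.
rates = conversion_by_segment(records)
for (visitor_type, badge), rate in sorted(rates.items()):
    print(f"{visitor_type:<10} {badge:<11} {rate:.0%}")
```

Because the grouping happens on raw records, the same approach works even if the test wasn't segmented when it was set up, as long as each visit was logged with the attribute you want to split on.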

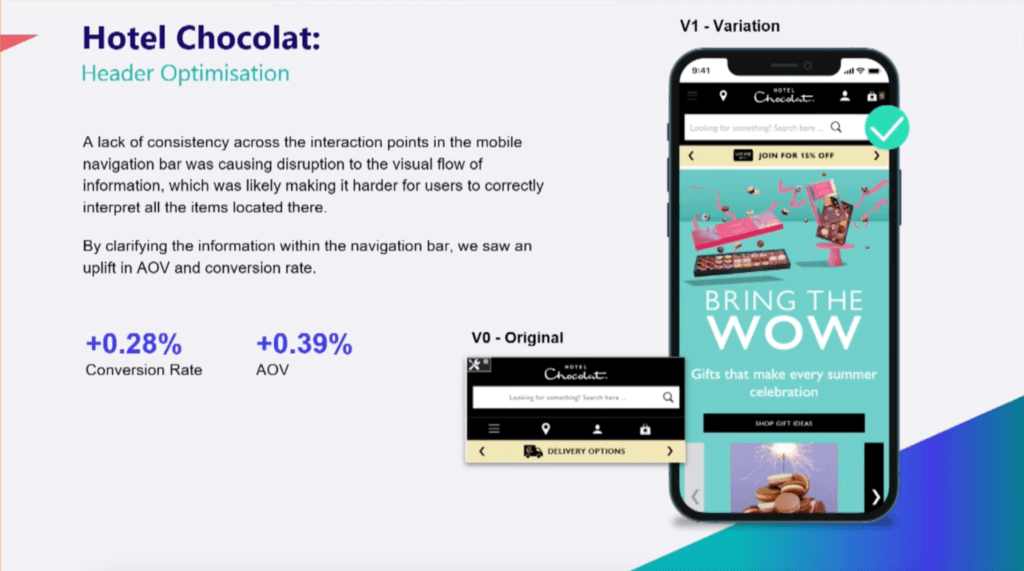

“We’re currently redesigning our entire website, but we know we can’t just launch straight into a new design,” says Gio Rita, UX and CRO Manager at Hotel Chocolat. “Instead, we’re ‘unlocking’ various elements of the redesign by doing plenty of A/B tests.”

Gio then showed us an example of one of these tests: testing the placement of icons in the new website’s header, which helped increase conversion rates and unlock new opportunities for creativity on the site.

“By putting the icons above the search bar, instead of below, we freed up real estate and saw conversion rate increase by 0.28% and Average Order Value (AOV) increase by 0.39%,” says Gio. “And freeing up that real estate gives us the opportunity to experiment with other elements, and test those too.”

A 0.28% uplift might not seem like much, but as Devon observed, testing and experimentation are all about getting metrics moving in the right direction, not making a double-figure impact in a matter of weeks.
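When an uplift is this small, it helps to check whether the movement is distinguishable from noise. The sketch below uses a standard two-proportion z-test with only Python's standard library. The visitor and conversion counts are hypothetical (the panel did not share sample sizes), and this is one common way to run the check, not necessarily the method used by Hotel Chocolat or AB Tasty.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare control (a) and variant (b) conversion rates.

    Returns (relative_uplift, z_score, two_sided_p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    relative_uplift = (p_b - p_a) / p_a
    return relative_uplift, z, p_value


# Hypothetical traffic: 50,000 visitors per variant (assumed numbers).
uplift, z, p_value = two_proportion_z_test(2000, 50000, 2100, 50000)
print(f"relative uplift: {uplift:.2%}, z = {z:.2f}, p = {p_value:.3f}")
```

The smaller the uplift you want to detect, the more traffic you need before the p-value becomes meaningful, which is one reason small tests on high-traffic elements are a practical place to start.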

As well as monitoring elements of its website redesign, Hotel Chocolat is keeping a close eye on its audience to assess how they’re dealing with the impact of inflation. They’re doing this by testing to gather data on their audience’s response to, for example, surfacing personalized payment options on product description pages (PDPs).

The takeaway: Always Be Testing (especially during major change)

Hotel Chocolat’s example prompted Devon (AB Tasty) to observe that many brands make the mistake of pausing experimentation and testing when they’re redesigning their website.

“Every time this happens, I think they’re making a serious mistake,” says Devon. “I ask them: ‘How do you know what will work and where in your redesign if you’re not testing it?’”

Marketers use all sorts of initialisms as short-hand in their day-to-day: KPI, AOV, CPA, etc. Well, marketers, here’s a new one to add to your collection (and this one’s an order): ABT. Always Be Testing!

Whatever change is happening on your website (or in the wider world), you need to monitor and test to stay in touch with how your customers feel about it. And certainly don’t pause if you’re redesigning your website—test more.

3 A/B test examples showing why you should start small

As the old adage (courtesy of Lawrence of Arabia, fact fans) goes, big things have small beginnings.

That certainly applies when it comes to A/B tests. As Devon told us, when AB Tasty works with clients to build a roadmap for testing and experimentation, those clients may have a big end goal in mind, but the work always starts with small steps.

“We want to know what big things the client is looking to change,” says Devon, “and what micro steps we can take to achieve those big changes. Testing is all about micro-steps and iteration.” — Devon Boyd, Channel and Alliances Director, EMEA at AB Tasty

Now here are three examples of the sort of micro-steps AB Tasty are interested in…

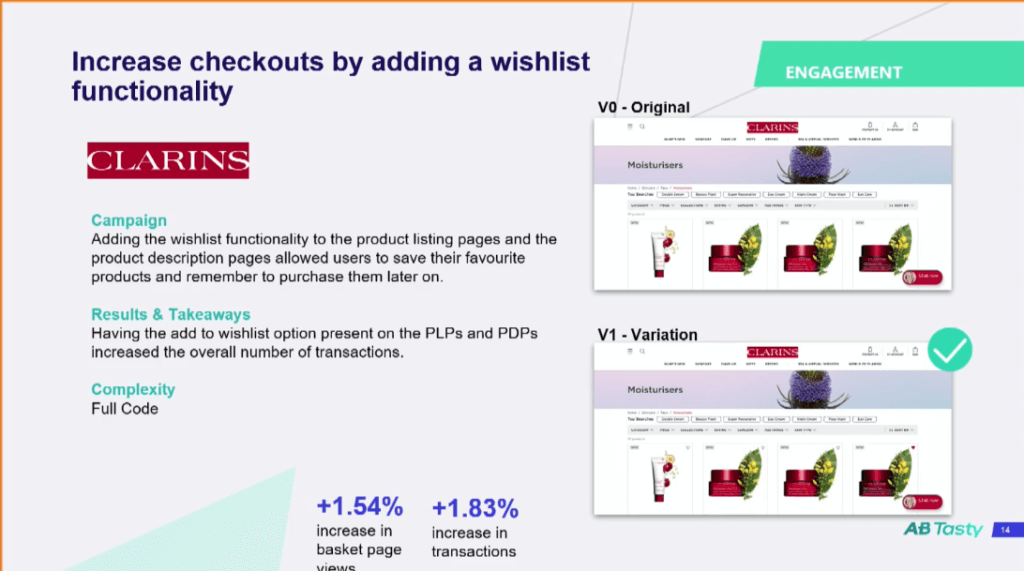

First up, Alejandra shared a test Clarins ran to highlight their site’s wishlist functionality. They added a simple heart symbol to Product Listing Pages (PLPs) and PDPs to allow users to add items to their wishlists.

“We saw a huge increase in engagement,” says Alejandra. “And not only that, but this also resulted in a 1.54% increase in basket page views and a 1.83% increase in transactions.”

On the page, it’s such a small change that you might not be able to spot the hearts Clarins have added from looking at our screenshot. But the impact of these hearts on Clarins’ metrics was significant. Proof that a little love (and a little testing) goes a long way!

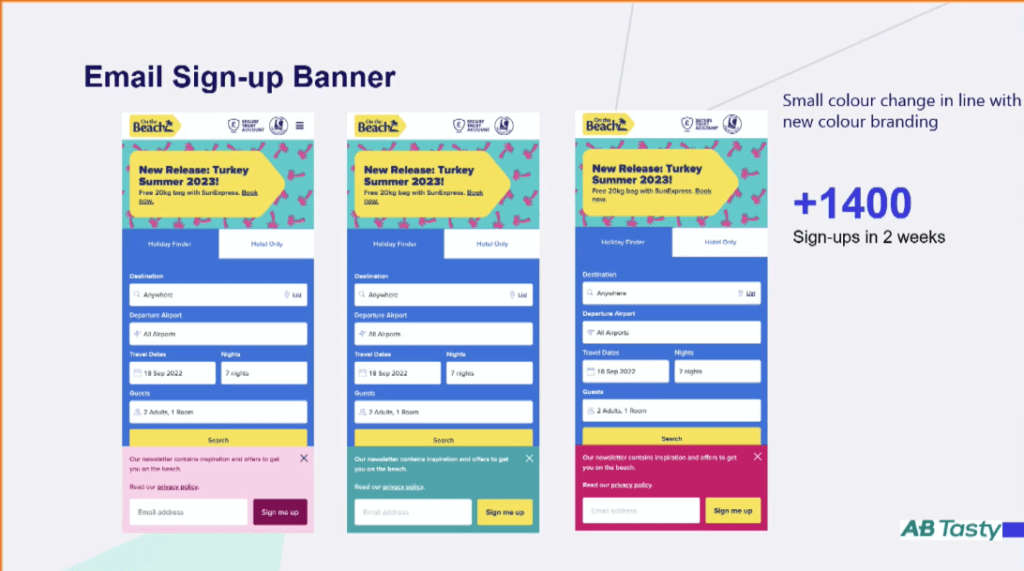

Next up, a test from On the Beach that reaffirms the power of small change—and shows the importance of visually reflecting your brand in your digital experience at every point.

As part of a bigger project to increase email sign-ups, Alex’s team ran a simple test to change the color of an email sign-up banner to fit with On the Beach’s new branding. The result? An extra 1,400 email signups across a two-week period.

“If you were to believe everything you read on LinkedIn, you might think that testing CTAs and color changes is a waste of time,” says Alex. “But this test suggests otherwise! It’s such an apparently minor thing to change, and I think this test shows that there’s a lot of value in running small tests.”

Amen to that. When it comes to digital CX, by all means, think big… But start small.

One of the big advantages of testing small things is how quickly you can run those tests and get results back—a huge bonus in a world of perpetual digital transformation where the most agile businesses thrive.

With their website redesign in full swing, Hotel Chocolat was waiting for usability survey results to come through; but in the meantime, they figured they could do some testing work in the background to validate some ideas at speed.

Gio gave us an example of one such test: converting the font in their mega-navigation bar from all uppercase to lowercase.

The theory behind this experiment was that simplifying the look and feel of the nav would reduce cognitive overload for the customer and make it easier for them to navigate and convert. And the results of the test suggest they were onto something.

“We saw a 2% increase in conversion and a massive 7.5% increase in AOV. And the test only took a few hours to set up! It was a little effort for a big reward. And it shows that while you’re running big, complicated tests, you can work away at simple tests and make a major impact.”

— Gio Rita, UX and CRO Manager at Hotel Chocolat

The takeaway: ‘Minor’ A/B tests can lead to major changes

Each of these A/B testing examples shows that, when it comes to testing, a little can go a very long way. Take inspiration from the examples above to find elements of your CX that you might be able to tweak. It doesn’t have to take long to test something that might make a big difference to your business.

As we’ve just seen, Hotel Chocolat made metrical magic happen simply by switching the format of their copy. But formatting is really just the tip of the iceberg when it comes to testing copy elements. Clarins can attest to that.

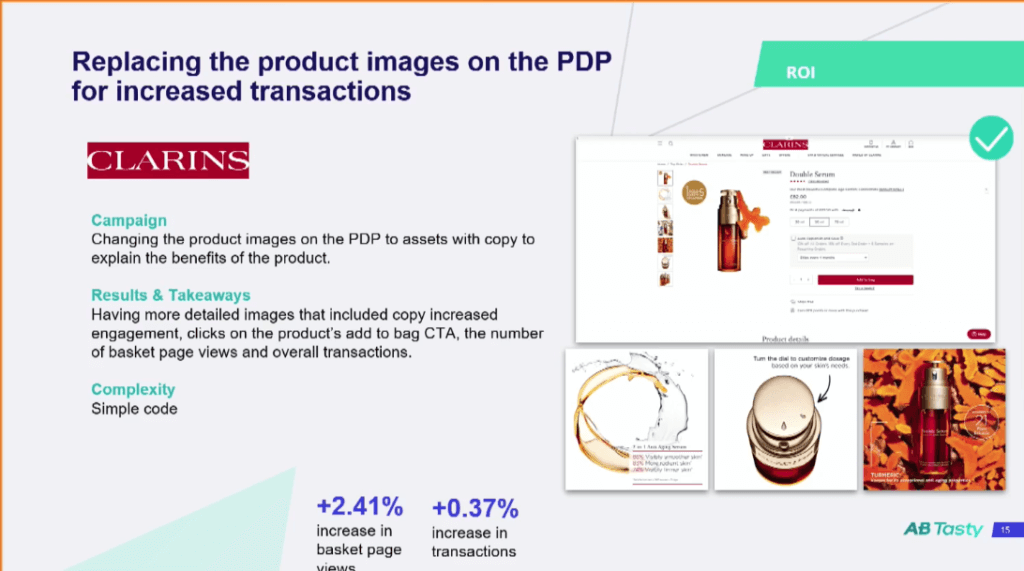

A picture can say a thousand words—but sometimes adding some words to those images can help spell things out for customers and inspire a decision.

That’s what Clarins discovered when they tested adding explanatory copy to images on their PDPs, as Alejandra explains:

“We had a lot of information in our PDPs, but sometimes it gets a bit lost. So we decided to test out adding some copy to our PDP images, letting customers know a little bit more about the products. We even used this copy to explain how to use the more simple products. And the results we got from adding that simple copy were spectacular!”

Clarins saw a ‘huge’ increase in engagement on those PDPs with added copy, with a big knock-on effect on basket page views (up 2.41%) and even on transactions (up 0.37%).

While Clarins’ A/B test example was about the power of adding substance through copy (what you tell customers), Alex of On the Beach talked about the importance of getting your copy style right.

“We’re looking at getting more branded copy into our website right now, to replace the rather functional copy we’ve previously had in places,” says Alex. “So for us, testing isn’t just about tracking button clicks. It’s also helping us effectively weave brand messaging into our CX.”

The takeaway: Don’t forget to A/B test copy

The general rule with copy for CX is to pare it down to what’s essential—but have a think about where essential copy might be lacking from purely visual elements of your CX. And don’t underestimate the power of tone of voice. (Or the power of testing it.)

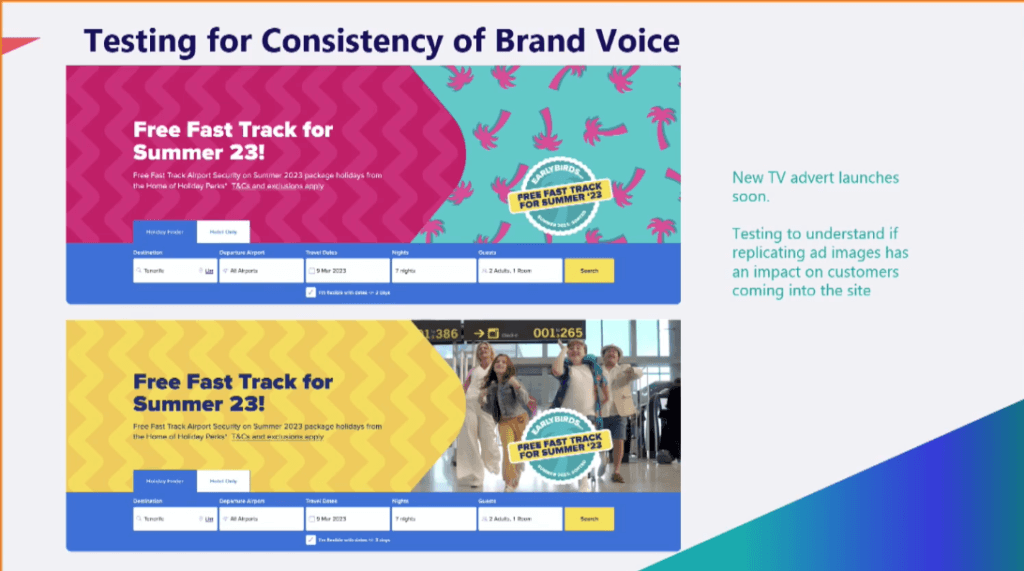

On the Beach launched an ad in January. The ad was irreverently soundtracked with a Christmas song—which annoyed some but amused and engaged plenty of others.

Now the company is getting ready to launch another seasonal advert, and Alex’s team are testing to see if replicating the advert visuals on On the Beach’s website will have an effect on the progression of customers through the website.

The takeaway: A/B test to prove the power of brand to the business

Marketers don’t need to be told about the importance of brand. Other departments, however, can be a different story: a ‘once upon a time’ with a rocky beginning, hazardous middle and sad ending. But testing can be a marketer’s knight in shining armor for proving how vital brand is.

Although the results of this test aren’t in yet, this is a great example of using testing to measure the actual impact of your brand identity on engagement. (It also acts as a reminder to connect your online and offline brand experiences wherever possible.)

We hope this blog has done for you what the CX Circle session did for us: alert you to the flexibility of testing, and the potentially endless possibilities that come with testing every conceivable aspect of your digital experience.

Of course, to run effective A/B tests like those we’ve highlighted above, you’re going to need access to high-quality analytics data—data, ideally, that allows you to see not only what customers are clicking on and viewing, but how exactly they’re making their way through your website and app. (And that includes what they’re doing between clicks.)

Every experiment is a hypothesis, and while an uptick or downturn in metrics can tell you whether or not what you’ve done is ‘working’, it won’t necessarily tell you why it’s working. For that, you need extra evidence.

The sort of evidence that shows you where customers are scrolling, where they’re rage-clicking, where they’re converting and where they’re bouncing—even to the extent of letting you watch individual user sessions to see exactly where things have gone right or wrong.

For that, you’re going to need a digital experience analytics platform (DXP). More specifically: you’re going to need Contentsquare, the only DXP that gives you rich and contextual insights across the entire customer journey and leverages machine learning to bring both UX and technical issues to your attention and prioritize the most pressing CX elements you need to act on.
