Users don’t always interact with designs the way we expect them to. A missing label, a broken tab order, or a lack of screen reader support can block someone from completing a task in your product or app, or on your site.

Digital accessibility testing helps you find and fix these issues before they affect users, ensuring your user experience (UX) remains consistent for people with different needs, devices, and abilities.

This guide walks you through what accessibility testing involves, the types of tests to consider, and how to build it into your everyday workflow (even if you’re not an expert).

Key insights

Accessibility is contextual. Barriers don’t just affect people with permanent disabilities; they also appear when someone is tired, distracted, holding a baby, or squinting at a screen in sunlight. Testing makes your product more usable for everyone.

Data connects empathy to action. Friction signals like rage clicks and drop-offs show where people struggle, while surveys and user tests reveal why. Together, they give teams the evidence and human stories to prioritize accessibility fixes.

Every test removes a barrier. You don’t need a massive audit to start. A single keyboard walkthrough, a screen reader session, or one round of user feedback can uncover issues that make or break someone’s experience.

What is digital accessibility testing?

Digital accessibility testing is the practice of checking how people with different needs, abilities, and devices experience your digital product. It applies to websites, apps, software platforms—anywhere a person interacts with a digital interface—and looks at whether they can use it without accessibility barriers.

Barriers show up in many forms: a form field a screen reader can’t identify, a button that can’t be reached with a keyboard, or instructions that disappear too quickly to read. These are the moments that stop someone from completing a task.

Passing every checkpoint in the Web Content Accessibility Guidelines (WCAG) doesn’t guarantee accessibility either. Those standards, published by the World Wide Web Consortium (W3C), provide a foundation, but real testing ensures that people can actually use your product.

Testing helps close that gap, showing whether your digital experience truly works for the people it’s meant to serve—and giving your team the resources you need to build an accessible website or product.

How to test digital accessibility in 7 practical steps

Accessibility has to be built in from the start, and testing is where that commitment becomes real. If you treat it as a one-off checklist, that process can feel overwhelming. A better approach is to break it into practical steps you can apply regularly.

Here are 7 essential steps to start testing in ways that make a difference.

1. Determine your testing goals

Digital accessibility testing looks at how people with visual, cognitive, auditory, and motor needs use your product, without relying on extra help. To do that, you need to be clear about the accessibility scenarios you’re checking.

Ask simple, task-focused questions of your digital product, such as:

Can someone navigate this interface without a mouse?

Will a screen reader correctly describe what this form field is for?

Is the layout still readable when zoomed to 200%?

Can someone complete a task while managing reduced vision, memory load, or distractions in their environment?

These practical, human-centered questions reflect real situations users face every day. Clear goals make your testing more meaningful and help you focus on what actually impacts people’s ability to succeed.

2. Choose your accessibility testing methods

Every testing method shines a light on a different barrier. Some pick up code-level issues, others show you how tools handle your digital content, and only real people can surface the frustrations that software can’t predict.

The strongest strategy combines digital accessibility tools and methods, ensuring your product holds up in real-world use.

| Testing type | What it does | Example | When to use it |
|---|---|---|---|
| Automated testing | Scans for code-level issues quickly, though not comprehensively | Automated tools like Axe, Wave, or Lighthouse scan for contrast errors, missing labels, or heading order | During development or QA |
| Manual testing | Helps you test flows with just a keyboard to catch nuance and usability issues | People test tab order, keyboard-only navigation, or visible labels to spot focus traps and confusing paths | Pre-launch QA |
| Assistive tech testing | Checks whether content is compatible with the accessibility tools users depend on | Screen readers (JAWS, NVDA, VoiceOver), magnifiers, and switch devices show you how content performs under real assistive tech | During design and QA |
| Real user testing | Surfaces barriers that checklists and scans miss | Platforms like Contentsquare let you invite people with disabilities to complete key tasks and share their experience | Design validation and audits |

Each test removes a barrier. The more you address, the more usable and welcoming your digital product becomes.
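To make the automated testing row more concrete, here is a minimal sketch of the kind of code-level check such tools perform. It is not how Axe, Wave, or Lighthouse work internally, and it covers far fewer rules. It only uses Python's standard `html.parser` to flag form fields with no obvious accessible name and images with no `alt` attribute. The names `LabelChecker` and `find_label_issues`, and the sample markup, are hypothetical and chosen for illustration.

```python
from html.parser import HTMLParser

# Input types that get their accessible name some other way (not checked here).
SKIPPED_INPUT_TYPES = {"hidden", "submit", "button", "reset", "image"}


class LabelChecker(HTMLParser):
    """Hypothetical, simplified checker: real tools apply many more rules."""

    def __init__(self):
        super().__init__()
        self.label_targets = set()  # ids referenced by <label for="...">
        self.fields = []            # (tag, attrs, wrapped_in_label) per field
        self.issues = []
        self._label_depth = 0       # > 0 while inside a <label> element

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "label":
            self._label_depth += 1
            if attrs.get("for"):
                self.label_targets.add(attrs["for"])
        elif tag in ("input", "select", "textarea"):
            input_type = (attrs.get("type") or "text").lower()
            if tag == "input" and input_type in SKIPPED_INPUT_TYPES:
                return
            self.fields.append((tag, attrs, self._label_depth > 0))
        elif tag == "img" and "alt" not in attrs:
            self.issues.append(f"<img src={attrs.get('src')!r}> has no alt attribute")

    def handle_endtag(self, tag):
        if tag == "label" and self._label_depth:
            self._label_depth -= 1


def find_label_issues(html: str) -> list[str]:
    checker = LabelChecker()
    checker.feed(html)
    checker.close()
    issues = list(checker.issues)
    # Fields are checked after parsing so a <label> may come after its field.
    for tag, attrs, wrapped in checker.fields:
        named = (
            wrapped
            or attrs.get("id") in checker.label_targets
            or attrs.get("aria-label")
            or attrs.get("aria-labelledby")
        )
        if not named:
            issues.append(f"<{tag} name={attrs.get('name')!r}> has no label")
    return issues


sample = """
<form>
  <label for="email">Email</label>
  <input id="email" type="email" name="email">
  <input type="text" name="card_number">
  <input type="submit" value="Pay">
</form>
<img src="logo.png">
"""

for issue in find_label_issues(sample):
    print(issue)
```

A check like this can only confirm that a label exists. Whether the label makes sense to the person hearing it is something the manual, assistive tech, and real user rows in the table are there to answer.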

3. Add accessibility checks as you develop your product

Accessibility testing is most effective as part of the product’s DNA from the very start, not just at the end of a sprint. Leaving it until the last stage makes issues harder, slower, and more costly to fix.

Instead, weave testing into each development phase:

In design, check color contrast, compatibility across devices, font legibility, and visual hierarchy with every new component

During development, run automated checks to confirm tab order, labels, and accessibility rules before merging code

In quality assurance (QA), add accessibility checks to your standard release tests, like screen reader validation and keyboard-only navigation

Post-launch, monitor how real people interact with your product. Look at where they get stuck or drop off, and pair those signals with direct feedback to see what’s working and what still feels inaccessible.
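As one example of a design-phase check, color contrast can be verified with a few lines of code. The sketch below follows the relative luminance and contrast ratio definitions from WCAG 2.x and compares the result with the Level AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are illustrative, and the sketch assumes six-digit hex colors with no transparency.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#rrggbb' (assumed format)."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """True if the pair meets the WCAG AA minimum contrast for the text size."""
    minimum = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= minimum


# Example: check a grey body-text color against a white background.
print(round(contrast_ratio("#767676", "#ffffff"), 2), passes_aa("#767676", "#ffffff"))
```

Running a check like this against each new component's color pairs catches low-contrast text before it reaches development, where it is slower to fix.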

When designers, developers, QA testers, and product teams all treat accessibility as part of their shared workflow, it doesn’t feel like extra work. It just becomes the way the team builds. Each release makes the product a little stronger and a little easier for more people to use.

💡 Pro tip: accessibility issues often reveal themselves in behavior data.

Contentsquare’s Experience Analytics capabilities give you visibility into these signals so you can spot barriers faster and prove their impact:

Heatmaps highlight where people rage click, struggle, or hit dead ends. These frustrations may come from poor labeling, unclear instructions, or controls that aren’t usable with assistive tech.

Journey Analysis lets you compare the paths of users who complete tasks with those who drop off. If you see higher abandonment among users on certain devices or assistive technologies, that’s a signal that some tasks are unnecessarily difficult for certain users.

Session Replay shows you how those accessibility issues play out in real time. Watching a user get stuck on a form or lost in navigation turns abstract accessibility issues into identifiable user struggles, giving teams a clear reason to act quickly.

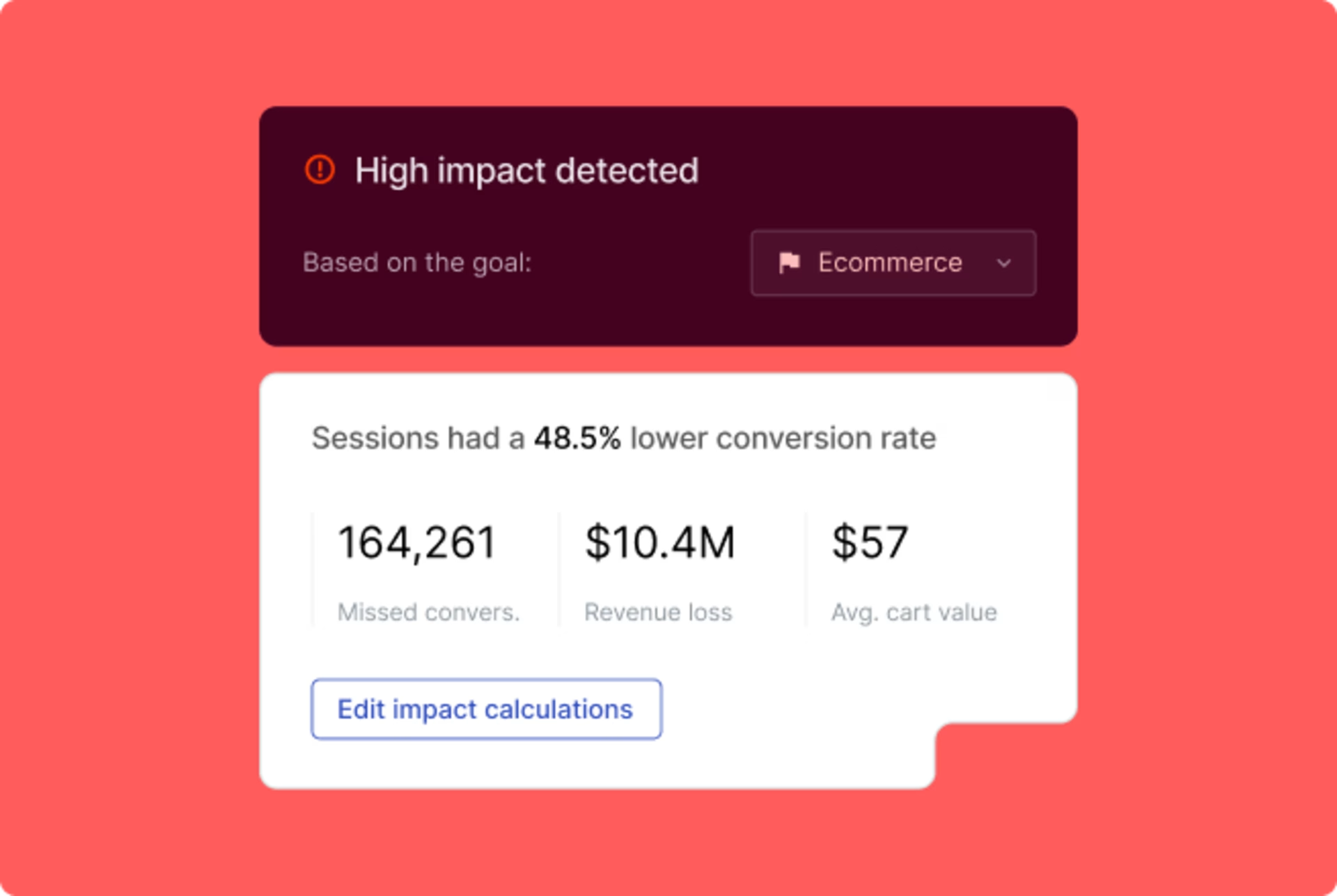

Impact Quantification translates these barriers into numbers—how many people are affected, and how that impacts conversions or revenue. That evidence helps ensure accessibility fixes receive the priority they deserve.

Together, these insights connect empathy to action. They remind us that behind every drop-off or error is a person trying to achieve something, and accessibility testing is about giving them the chance to succeed.

Impact Quantification uses behavioral data to reveal both sides of the story: how people use your product when it works, and what happens when it doesn’t

4. Validate your product with real users

Automated accessibility testing is helpful, but these tools are commonly estimated to catch only around 30–40% of accessibility issues. They can point out missing labels or contrast errors, yet they can’t tell you when something feels confusing, overwhelming, or broken. That kind of insight only comes from real people.

Human feedback reveals the issues that surface under specific conditions, like when someone uses VoiceOver in Safari to complete a checkout.

It also draws attention to moments when the markup is technically correct but the experience is still disruptive, and to the ways an interface can confuse or overwhelm someone with cognitive or sensory differences.

To make this feedback part of your process, use Contentsquare’s Voice of Customer (VoC) capabilities. For example:

Conduct User Tests to observe how people complete tasks in their own environment. Use them to pay particular attention to barriers you might never see in lab conditions.

Launch Surveys to ask testers questions like “Was anything difficult to navigate?” This helps you notice and understand accessibility issues as they happen.

Use Interviews to talk through their experience and understand both the issue and the frustration behind it

Pairing survey responses with interviews or session replays gives you a clearer picture of the accessibility issue

💡 Pro tip: the best accessibility testing feedback comes from people who actually use assistive tech every day.

Contentsquare impact initiatives, such as the Contentsquare Foundation and Accessibility Lab, connect your team with inclusive research panels, so you can run task-based tests with real users and see firsthand how your product holds up in real life.

Pairing real-user testing with guidance from accessibility experts gives teams both the empathy and the practical direction they need to act on what they see.

5. Run a structured accessibility audit

Accessibility audits help you go beyond spot checks and create a clear roadmap for improvement. Instead of fixing issues as they pop up, an audit gives you a structured view of where barriers exist, how severe they are, and what to fix first.

The idea is simple: pick a key journey, layer different types of testing, and document what you find in a way your team can act on.

Take a checkout flow as an example. A structured audit might look like this:

| Step | How to test it | What you might find |
|---|---|---|
| 1. Choose the journey | Pick a high-stakes flow to test | Focus on checkout, since it’s critical for both users and the business |
| 2. Run automated scans | Use tools to flag common issues | A missing form label on the payment page in Chrome confuses screen readers |
| 3. Layer in manual testing | Try the flow with just a keyboard | People can’t reach the ‘Continue’ button without a mouse |
| 4. Add assistive tech tests | Use screen readers and magnifiers | Shipping options aren’t announced correctly by a screen reader |
| 5. Gather real user feedback | Invite participants to test and reflect | A user notes webpage error messages disappear too quickly to read |
| 6. Document findings | Log details, severity, and next steps | List each issue, mentioning its impact and suggested fix |
| 7. Prioritize fixes | Focus first on blockers | Fix the missing label, inaccessible button, and shipping options before moving on to secondary issues |

💡 Pro tip: the real value of an accessibility audit is in pointing out the blockers that matter most. Focus on the problems that stop people from completing key tasks, and give teams clear next steps. That way, fixes actually happen, and accessibility keeps improving release after release.
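Steps 6 and 7 of the audit can be as lightweight as a structured list that sorts itself by severity. The sketch below is one possible shape for such a log, not a prescribed format. The `Severity` levels, the `Finding` fields, and the suggested fixes are assumptions made for illustration, while the issues themselves come from the checkout example in the table.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity scale: lower number means fix sooner."""
    BLOCKER = 1  # stops people from completing the task
    MAJOR = 2    # task is possible but difficult
    MINOR = 3    # cosmetic or low-impact


@dataclass
class Finding:
    step: str           # where in the journey the issue appears
    issue: str          # what was observed
    severity: Severity
    suggested_fix: str  # illustrative next step, not an official remediation


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings so blockers come first (stable within a severity level)."""
    return sorted(findings, key=lambda finding: finding.severity)


findings = [
    Finding("Payment", "Error messages disappear too quickly to read",
            Severity.MAJOR, "Keep errors visible until dismissed"),
    Finding("Payment", "Form field has no label",
            Severity.BLOCKER, "Associate a visible label with the field"),
    Finding("Checkout", "'Continue' button unreachable by keyboard",
            Severity.BLOCKER, "Use a native button element"),
    Finding("Shipping", "Options not announced by screen reader",
            Severity.BLOCKER, "Group the options with an accessible name"),
]

for finding in prioritize(findings):
    print(f"[{finding.severity.name}] {finding.step}: {finding.issue} -> {finding.suggested_fix}")
```

Keeping the impact and the suggested fix next to each issue means the log doubles as a backlog, which is what turns an audit into fixes that actually ship.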

6. Track progress with accessibility metrics

The real measure of success isn’t how many issues you fix, but how those fixes improve the experience for people using your product.

By tracking meaningful accessibility metrics, you can see whether changes are actually making your product easier to use and more inclusive over time.

Some useful signals to measure include:

Fewer high-friction interactions: a drop in rage clicks shows that tasks are getting easier

Improved user sentiment: survey results that point to clearer navigation or more usable forms

Faster resolution times: accessibility-related bugs move quickly from report to fix

Higher pass rates in assistive tech testing: screen reader and keyboard flows work more consistently

Positive feedback trends: comments in on-site surveys shifting from frustration (“I couldn’t complete this step”) to trust (“This was easy to do”)
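One way to put a number on signals like these is to compare step-by-step drop-off on a key flow before and after a fix. The sketch below assumes you can export how many users reached each step; the function name `drop_off_rates` and all of the figures are invented for illustration and are not real results.

```python
def drop_off_rates(step_counts: dict[str, int]) -> dict[str, float]:
    """Share of users lost between each step and the one that follows it."""
    steps = list(step_counts.items())
    rates = {}
    for (name, entered), (_, reached_next) in zip(steps, steps[1:]):
        rates[name] = 1 - reached_next / entered if entered else 0.0
    return rates


# Made-up counts of users reaching each checkout step (ordered by step).
before_fix = {"cart": 1000, "shipping": 800, "payment": 400, "confirmation": 360}
after_fix = {"cart": 1000, "shipping": 820, "payment": 574, "confirmation": 520}

before = drop_off_rates(before_fix)
after = drop_off_rates(after_fix)

for step in before:
    change = (after[step] - before[step]) * 100
    print(f"{step}: {before[step]:.0%} -> {after[step]:.0%} ({change:+.1f} points)")
```

With these invented numbers, drop-off at the shipping step falls from 50% to 30%. A change like that is worth pairing with survey comments to confirm the fix is what made the difference.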

💡 If you’re using Contentsquare

Set up a simple product or website accessibility dashboard so progress is visible to everyone.

Start by including a Journey Analysis widget to track drop-off rates on key flows before and after fixes

Then, layer in Impact Quantification to show how much each resolved accessibility issue boosts conversions or reduces abandonment

Finally, pair these with VoC surveys that ask about clarity and ease of use

Review the dashboard each sprint to see both the hard numbers and the human feedback that prove accessibility changes are making a real difference.

Dashboards help you streamline reviews and track whether accessibility improvements are reducing friction

7. Make digital accessibility testing part of your culture

Accessibility isn’t something you cross off the list and move on from. Products evolve constantly—new features are launched, designs change, and code gets updated.

Every release is a chance to either keep accessibility strong or accidentally let barriers slip back in. The difference comes down to culture.

When accessibility is woven into the way teams work:

QA teams don’t just verify functionality, but also ensure features meet basic accessibility standards before sign-off

Analytics dashboards surface early warning signs, with automated alerts for spikes in rage clicks, exits, or drop-offs, so you know immediately if a release introduced new blockers

VoC insights turn repeated frustrations into roadmap priorities, guiding fixes by human impact, not guesswork
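The idea behind an alert for spikes in rage clicks can be sketched with a simple statistical rule: compare the latest value with a recent baseline and flag it when it sits far above normal. This is a generic illustration, not a description of how Contentsquare or any other analytics product computes its alerts. The function name `is_spike`, the threshold of 3 standard deviations, and the sample figures are all assumptions.

```python
from statistics import mean, stdev


def is_spike(baseline: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` when it is more than `threshold` standard deviations
    above the mean of the baseline values."""
    if len(baseline) < 2:
        return False  # not enough history to judge
    spread = stdev(baseline)
    if spread == 0:
        return latest > mean(baseline)
    return (latest - mean(baseline)) / spread > threshold


# Made-up daily rage clicks per 1,000 sessions on a checkout page.
last_week = [12, 15, 11, 14, 13, 12, 16]

print(is_spike(last_week, 15))  # an ordinary day
print(is_spike(last_week, 31))  # the day after a release
```

A rule like this only says that something changed. Session replays and user feedback are still needed to tell whether the cause is an accessibility barrier.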

💡 Pro tip: some fixes take time. Accessibility tools like Readapt.ai use a simple browser extension to offer immediate support by adapting digital experiences in real time. This allows users with visual, motor, or cognitive impairments to complete tasks in the moment, while your team works on longer-term improvements behind the scenes.

Inclusive design starts with inclusive testing

Every person who uses your product brings their own context. Accessibility testing is how you make space for all of them.

Start small: test one flow, invite one person to try it, watch one screen reader session. Then take what you learn and build on it.

Each time you do, you’re removing a barrier for someone who just wants to get something done. Those moments of care add up, and together they create products that feel open, welcoming, and fair.

FAQs about digital accessibility testing

What’s the difference between an accessibility audit and accessibility testing?

An accessibility audit is a deep, structured review against accessibility standards. In the US, that often means checking against requirements like Section 508 and the Americans with Disabilities Act (ADA). Globally, teams often follow the World Wide Web Consortium’s WCAG 2.x standards, most recently WCAG 2.2, which set the benchmark for accessible digital design.

An audit usually covers a wide scope and documents issues with severity levels. It also provides remediation guidance: a detailed list of fixes and recommendations that show teams how to correct accessibility problems.

Accessibility testing, by contrast, can (and should) happen more frequently and flexibly. Designers, developers, and QA teams can run checks throughout their workflows to catch issues before they pile up and ensure equal access to key tasks like sign-ups, checkouts, or content downloads.
