User testing is often that ‘must-do’ task on your product checklist that gets ignored because there are so many different opinions on exactly what to test, which methods to use, and the different steps involved.

For example, how do you know which challenges warrant a full-blown A/B test and which just need a customer interview? And what are the results you can expect from a user test?

Analyzing successful user testing examples helps you answer all these questions.

This chapter looks at 6 real-life examples of how businesses successfully conducted user testing and the results they achieved. We also give you advanced tips on how you can emulate their practices to overcome your product optimization challenges.

Plus, we give you a free downloadable user testing checklist so you can align your objectives, assign tasks, and get started right away.

6 real-life user testing examples to learn from

Seeing user tests applied to real-world challenges helps you understand the depth of your own user experience (UX), so you can validate assumptions about user behavior and preferences and make effective product decisions.

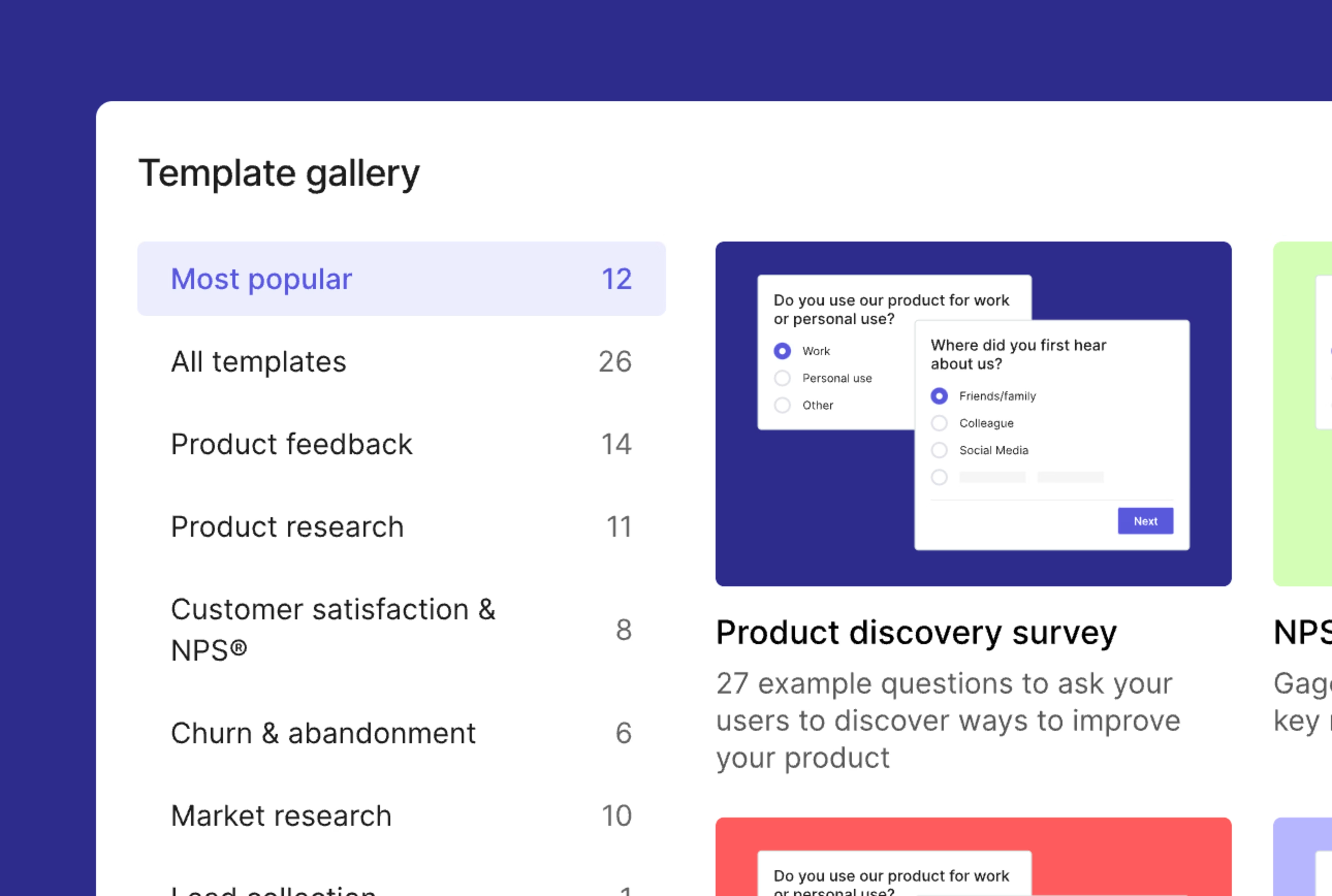

Before we dive into the examples, let’s first take a look at the most popular user testing methods and tools out there today:

User tests: capture genuine insights as users interact with your website or product on their own time, without the guidance of a moderator

Surveys: directly ask your users how they feel through strategically crafted survey questions and polls

Interviews: conduct one-on-one interviews to understand the nuances behind your users’ opinions

Focus groups: gather a group of users together to discuss and provide feedback on your product or service

A/B tests: test two different versions of your product to see which performs better with your users

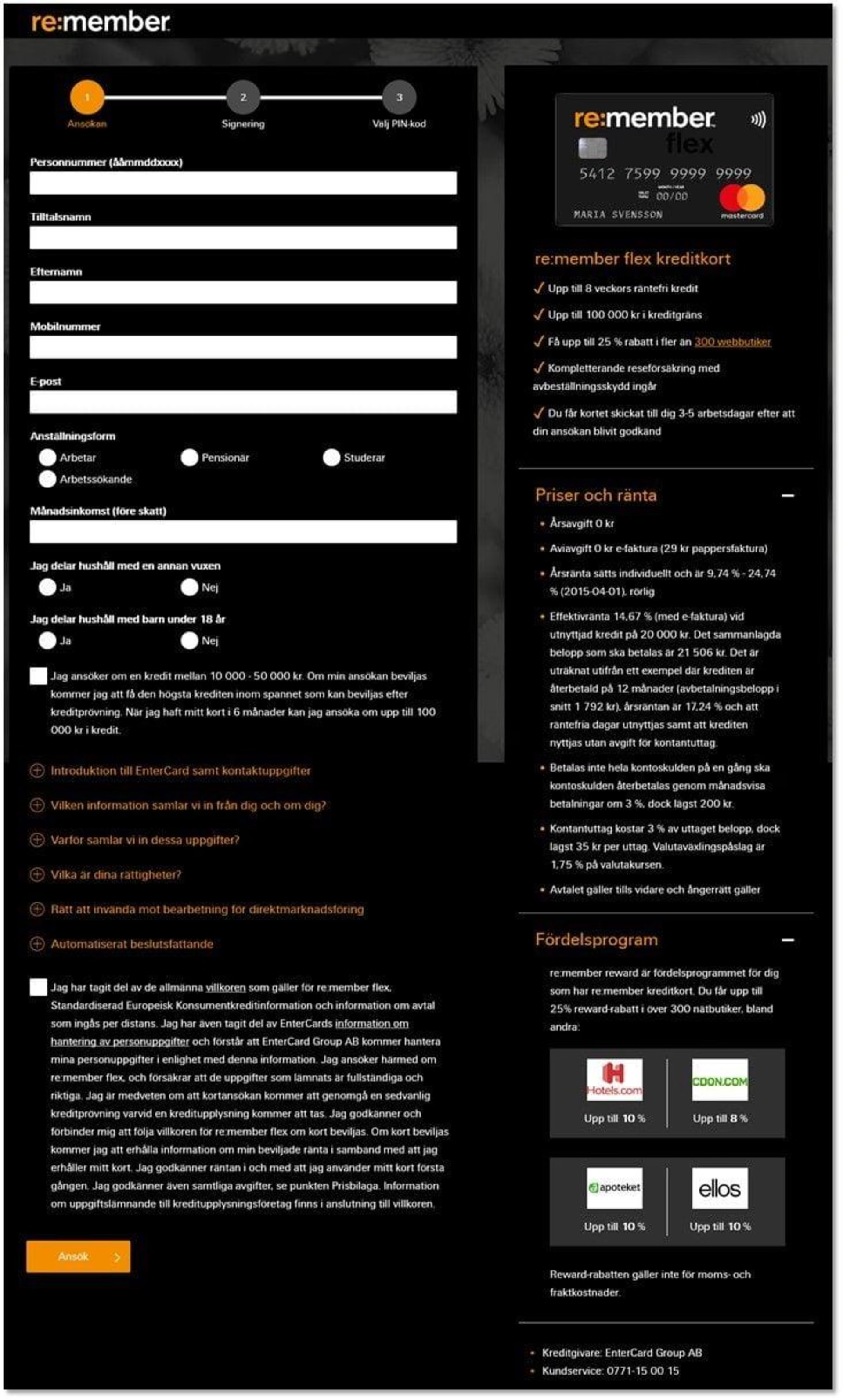

Heatmaps: analyze user behavior on your website or web app by visually mapping where they click and spend time

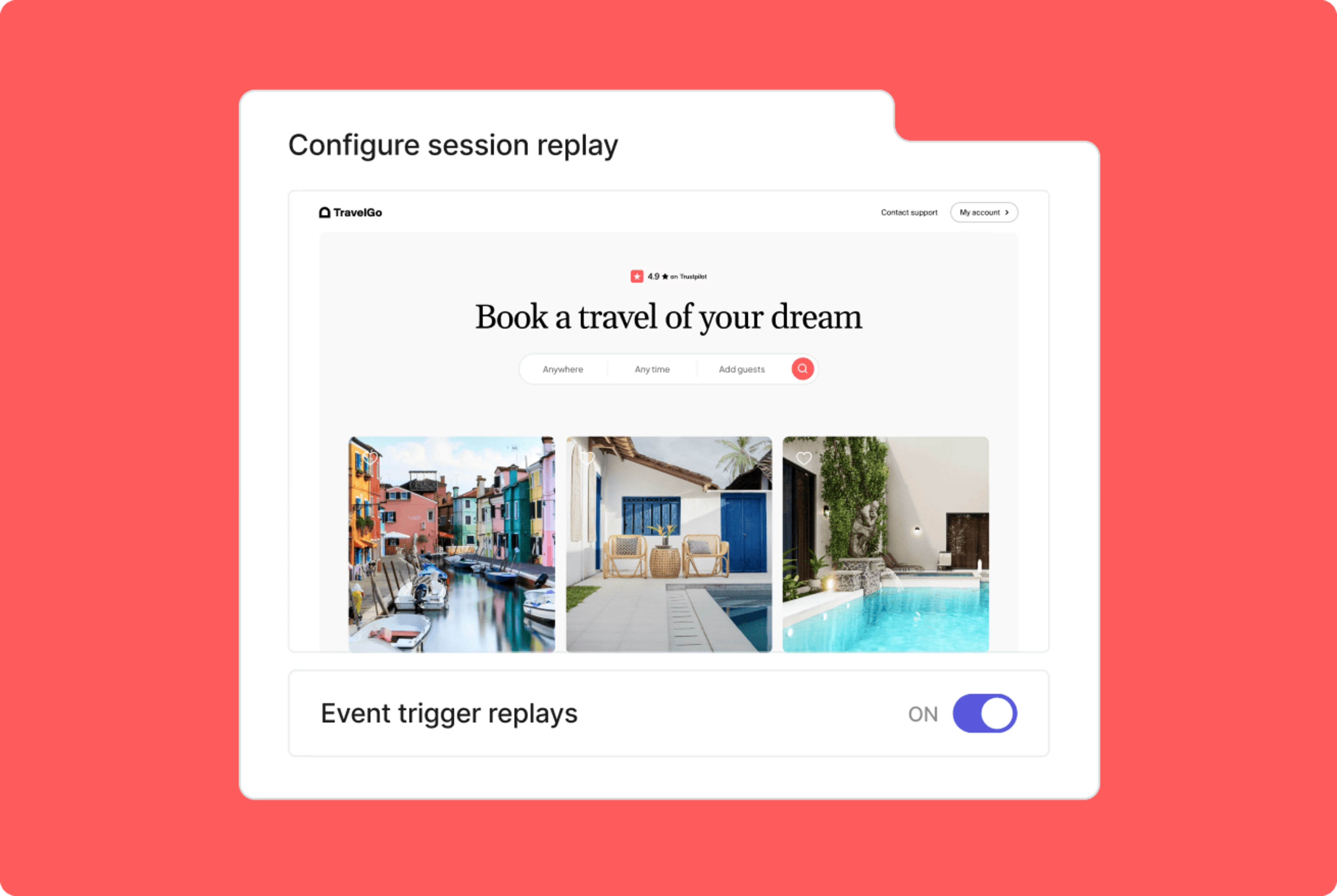

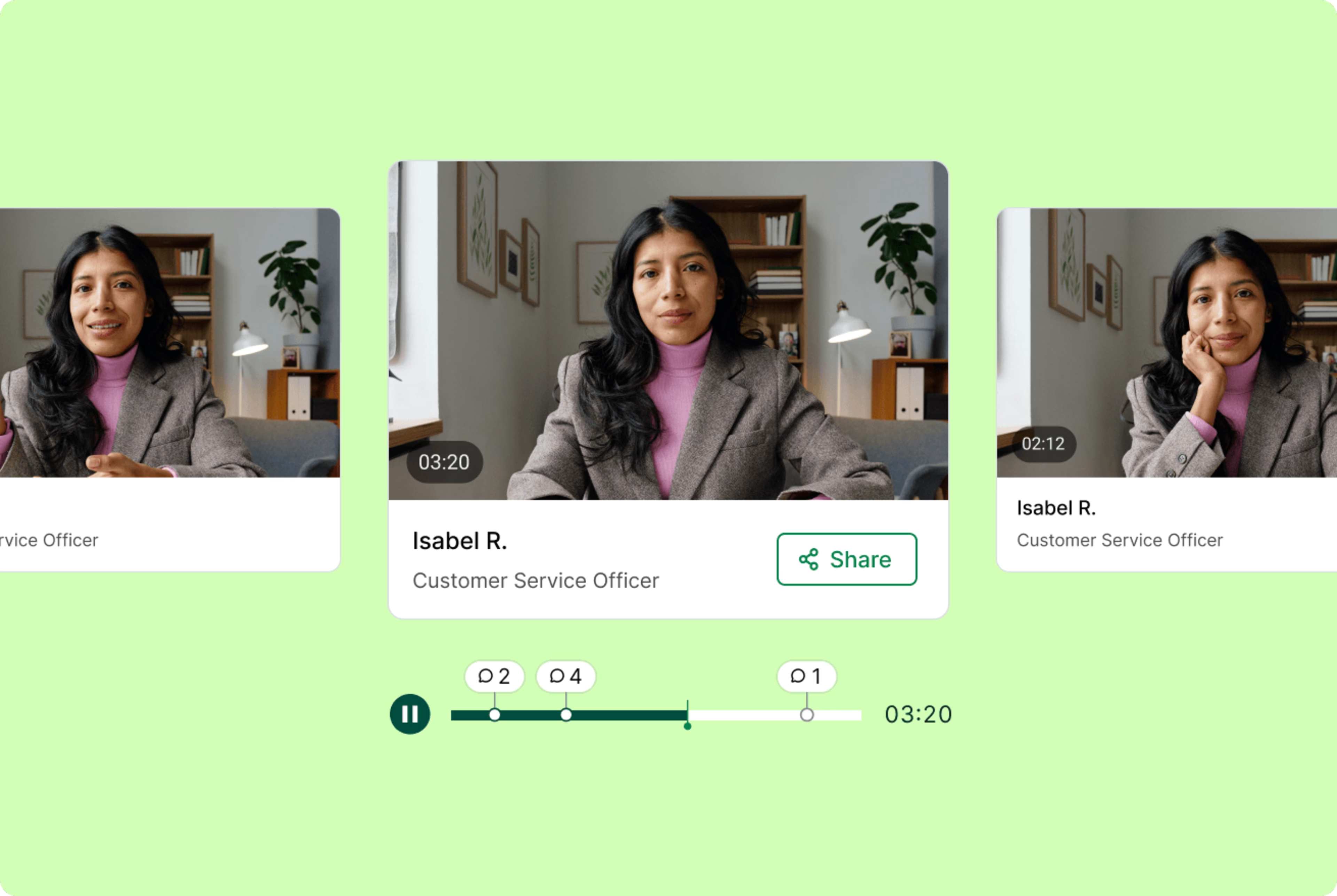

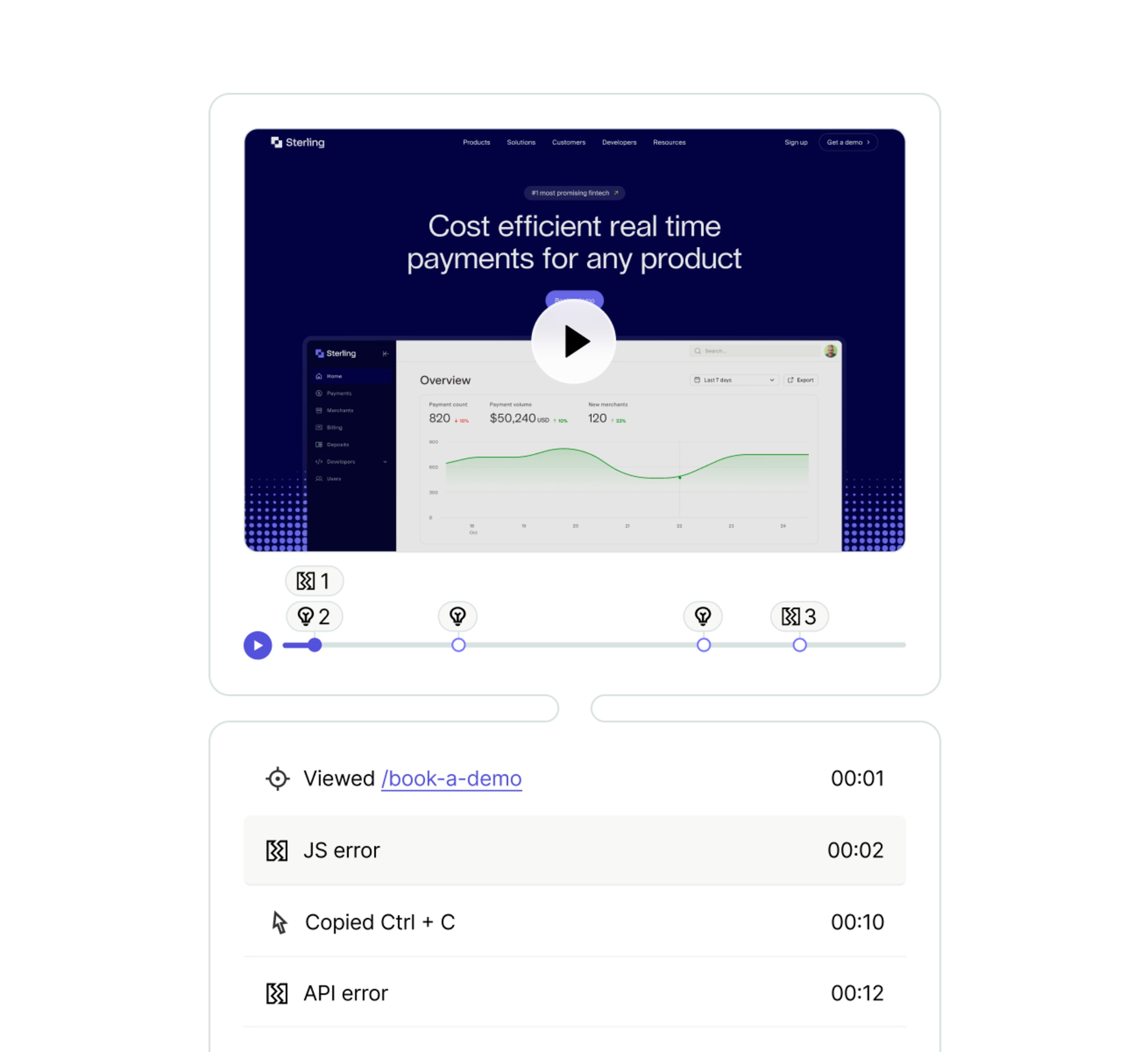

Replays or recordings: review and analyze user interactions with your product to identify pain points and opportunities for improvement

Now, let's explore how six companies used Hotjar’s digital experience insights tools to conduct user testing and improve UX.

1. How Bannersnack increased landing page sign-ups and feature adoption

Bannersnack, an online banner-making tool, was in a tough spot: web analytics had alerted them to low conversions, so they knew they needed to do something to boost their metrics, but had no insight into how people were using their tool and why they weren’t taking action.

1. Heatmaps and A/B testing

Bannersnack turned to user testing to make sense of their quantitative data. They first analyzed heatmaps to understand which elements users were engaging with or ignoring on their landing pages. They learned that the majority overlooked their main call to action (CTA), so the team enlarged the button and increased its contrast to draw more attention to it.

They then A/B tested the different CTAs and confirmed that users responded better to the landing page with the bigger and more prominent button.

By implementing the changes that heatmaps and A/B tests highlighted, the team increased sign-ups by 25%.
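Before you act on an A/B test like this one, it helps to check that the gap between the two variants is bigger than random noise. Here is a minimal sketch of a two-proportion z-test, one common way to make that check. The visitor and sign-up counts are hypothetical, not Bannersnack's data, and the function name is our own.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical counts: variant A is the original CTA, variant B the bigger button.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be chance alone. Most A/B testing tools run a check like this for you, so treat the sketch as a way to understand the result, not a replacement for your tool.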

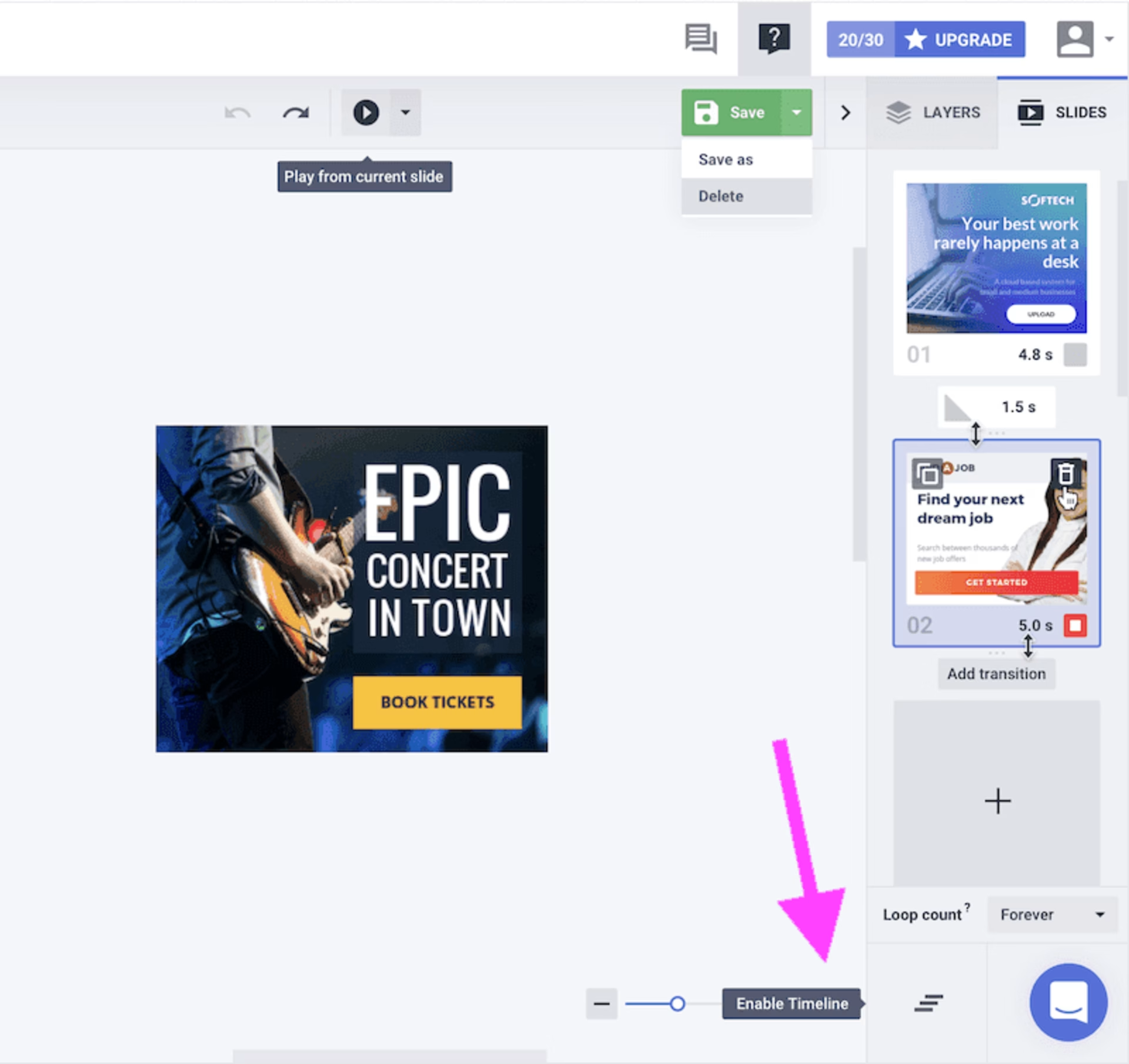

2. Session recordings

After watching several session recordings, the Bannersnack team realized many customers were overlooking their new ‘Timeline’ feature—it wasn’t quite as visible to users as the designers had initially hoped.

The fix was simple: they added the word 'Timeline' to a button and repositioned it slightly.

This easy change drove up sign-ups by another 12%.

🚨Key takeaway: observe how your users engage with your product by using tools like session replays and heatmaps to ensure important elements like CTA buttons and features are crystal clear and visible.

💡Pro tip: segment and analyze your replays to discover user frustrations or pain points. For example, you can analyze Contentsquare’s session replays by:

Rage clicks: users repeatedly clicking over an element that could indicate a website bug, a broken link, or an image that they think is clickable but isn't

U-turns: people who navigate to a page then quickly return to the previous one
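If you export raw click events, the rage-click idea above can be roughly approximated in a few lines. This is a sketch under our own assumptions: the `Click` record, the threshold of 3 clicks, and the 2-second window are all hypothetical and are not how Contentsquare defines or detects rage clicks.

```python
from dataclasses import dataclass

@dataclass
class Click:
    session_id: str
    element: str      # e.g. a CSS selector for the clicked element
    timestamp: float  # seconds since the session started

def find_rage_clicks(clicks, min_clicks=3, window_seconds=2.0):
    """Return (session_id, element) pairs with at least min_clicks clicks inside window_seconds."""
    by_target = {}
    for c in clicks:
        by_target.setdefault((c.session_id, c.element), []).append(c.timestamp)

    flagged = set()
    for key, times in by_target.items():
        times.sort()
        # Slide a window of min_clicks consecutive clicks over the sorted timestamps.
        for i in range(len(times) - min_clicks + 1):
            if times[i + min_clicks - 1] - times[i] <= window_seconds:
                flagged.add(key)
                break
    return flagged

# Hypothetical events: one user hammers an image, another clicks a CTA twice, far apart.
events = [
    Click("s1", "#hero-image", 10.0),
    Click("s1", "#hero-image", 10.4),
    Click("s1", "#hero-image", 10.9),
    Click("s2", "#cta", 3.0),
    Click("s2", "#cta", 9.0),
]
print(find_rage_clicks(events))
```

Only the first session is flagged, which tells you which replays to watch first.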

2. How Doodle boosted conversions by 300%

Doodle, a meeting scheduling tool, was suffering from inconclusive and often misleading in-tool data, which frustrated the team because they didn’t know how to optimize the product to increase adoption. So they decided to conduct user tests and see what their tool was missing.

1. Recordings

Using recordings, Doodle’s team observed users connecting empty calendars instead of real ones to their accounts—an insight that was absent in Doodle’s data.

2. Surveys

Doodle also used surveys to ask open-ended questions about their features and the overall user experience, so they could understand what their customers truly loved about the tool.

Acting on the insights from user testing by making it easier for people to connect calendars and focusing on popular features, Doodle increased conversions by 300%.

🚨Key takeaway: instead of relying on your gut and making assumptions about what your users want, ask them—and validate your product decisions.

💡Pro tip: use Feedback Collection and Surveys to gather real-time feedback from users as they interact with your website and directly ask them questions like

How easy was it to navigate our website and find what you were looking for?

How satisfied are you with your overall shopping experience on our website?

What could we do to improve your experience?

What questions or concerns were not addressed during your shopping experience?

![[Visual] Feedback button - How would you rate your experience](http://images.ctfassets.net/gwbpo1m641r7/6zpie5F6Gwd4oyqXaxBfcN/b7e9b7f3bfcc6265f47b5294d8fec319/Feedback_button.png?w=3840&q=100&fit=fill&fm=avif)

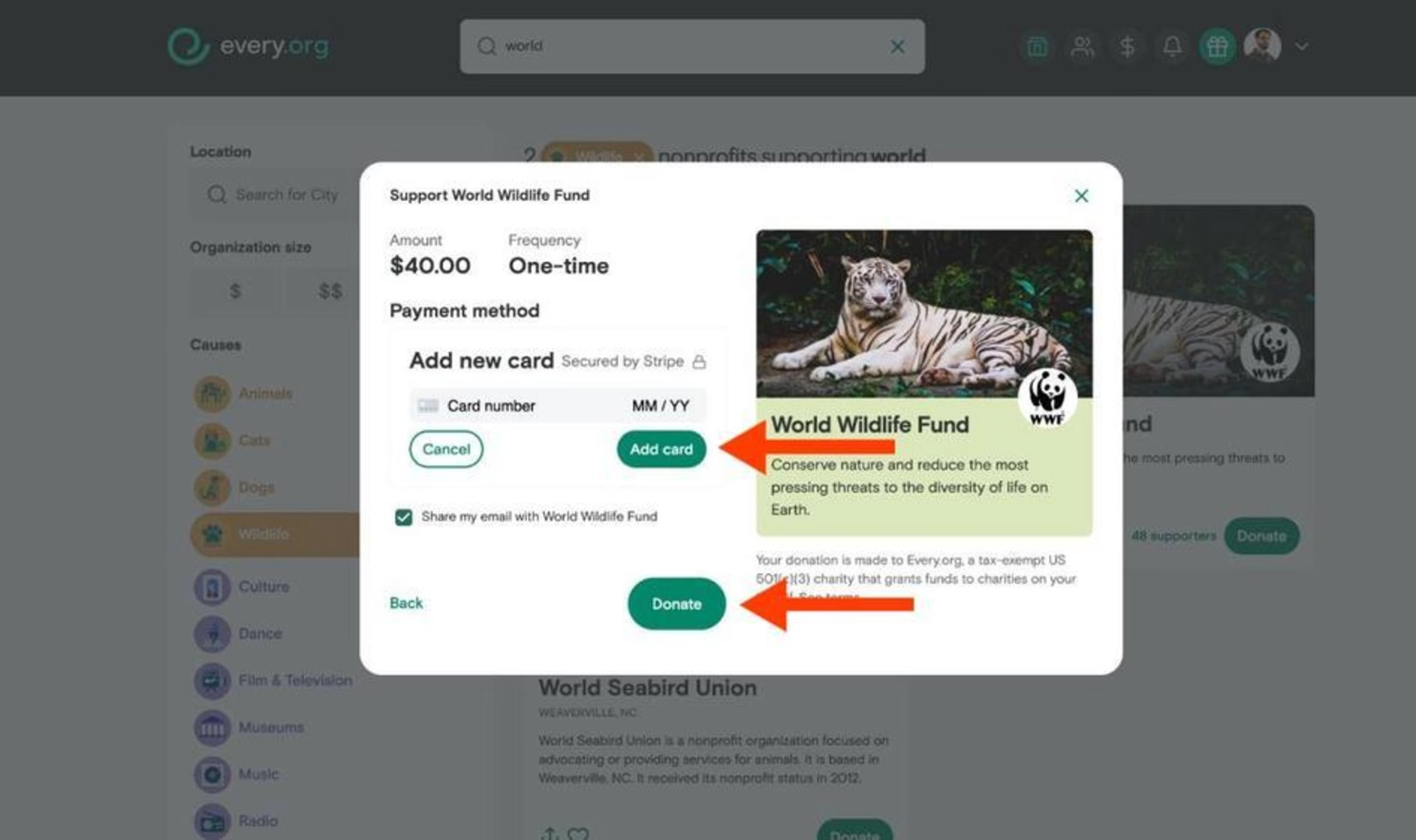

3. How Every.org increased donations to charities by 29.5%

Every.org, a donation platform making it easy for people to donate and for nonprofits to collect their donations, had a problem: donors were struggling with the process of making donations, and abandoned the site as a result. Dave Sharp, Every.org’s Senior Product Designer, set out to discover exactly what was causing friction, turning to user testing for assistance.

1. Interviews

Dave first sat down with users to ask them questions about how they felt about different website elements.

While he learned a lot through these in-person discussions with users, he was aware of potential bias: users tend to try to 'say the right thing' to please the interviewer, skewing results. To complement and validate these insights, he needed objective data.

2. Session replays

By filtering his session replays by URL, Dave could watch the most relevant ones and check them against the qualitative insights from his interviews. He saw that:

Users were rage-clicking on the ‘donate’ button

Very few users were first adding their card details and then proceeding to donate (which was the intended user flow) because the two buttons were parallel

Since adding the card was a regulatory requirement, Dave redesigned the flow to proceed to the ‘donate’ page only after users uploaded their card details. He also added an FAQ section to help users understand what donating with Every.org meant in terms of fees and taxes.

3. A/B tests

Dave then A/B tested the new flow against the previous one to see the impact of the redesign.

Recordings from the A/B test showed that users had stopped rage-clicking and were actively interacting with the FAQs before proceeding to donations.

Both changes were successful, leading to a 29.5% increase in conversions for Every.org.

🚨Key takeaway: ensure your user flows and customer journey are obvious and that users know what they have to do next to complete an action—like checking out. Don't just assume everything is going well—conduct a 1:1 interview where you watch users navigating your website, so you can see exactly where they stumble and ask follow-up questions.

💡Pro tip: use Contentsquare to schedule and run interviews with your own users or access Contentsquare’s pool of more than 200,000 testers. You can schedule interviews with users from different nationalities and job titles.

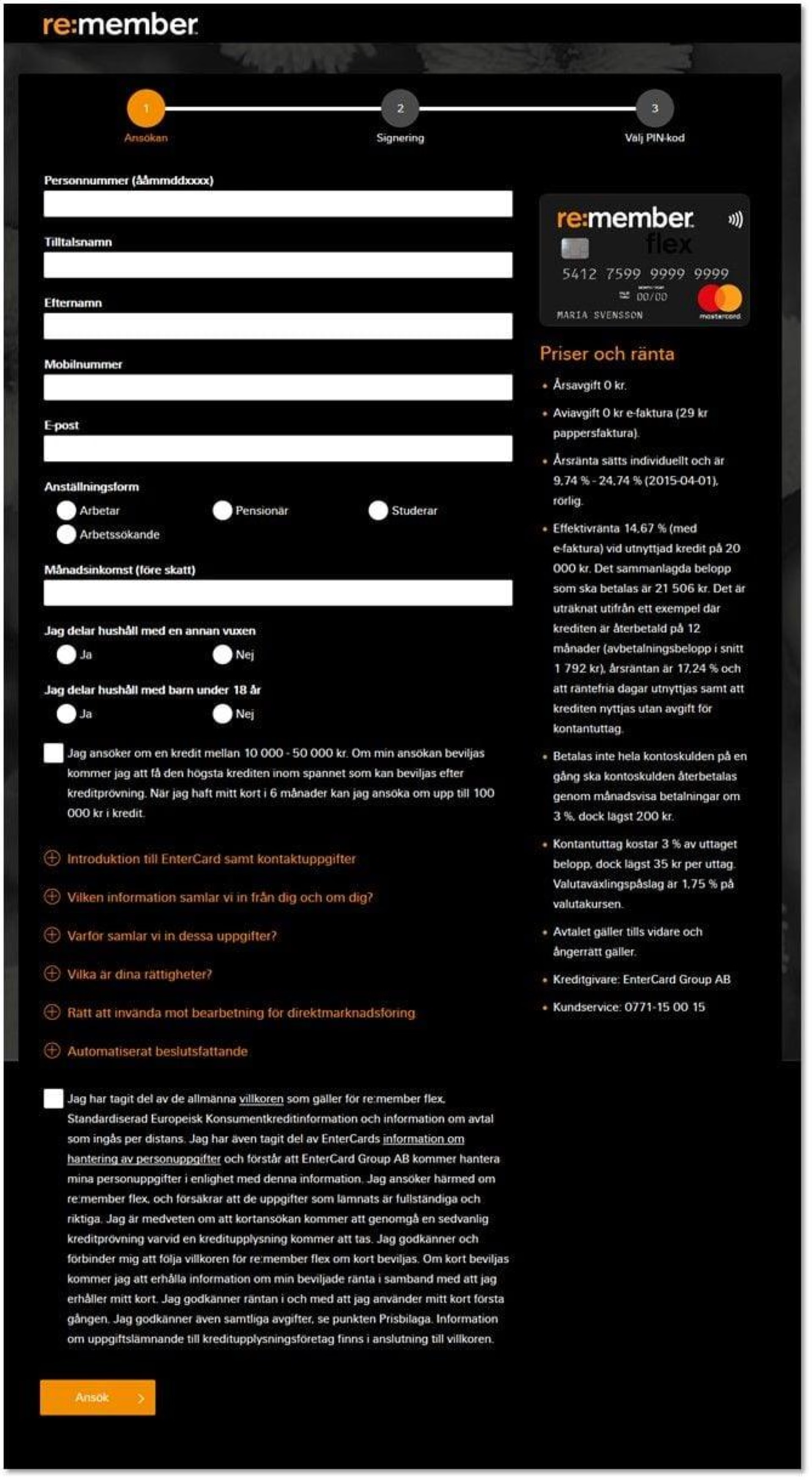

4. How re:member increased affiliate conversions by 43%

Steffen Quistgaard, Senior Marketing Specialist for re:member, a credit card company, noticed visitors from affiliate pages abandoning their credit card application form more often than usual.

Steffen wanted to find out exactly why the application was confusing people, so he conducted user tests.

1. Recordings

After reviewing session recordings or replays, Steffen noticed users were

Repeatedly hovering over the benefits section

Scrolling up and down instead of filling out the form

He concluded users were behaving this way because they wanted to learn more about the benefits but didn’t know how. So he turned to click heatmaps for more insight.

2. Click maps

After Steffen examined click maps, a category of heatmaps that show the number of clicks in a specific spot, he saw that users were

Tapping repeatedly on the benefits section even though it was unclickable

Trying to expand the bullet points (to reveal more details)

Navigating back to the homepage to get more info

Putting together all these insights from recordings and click maps, Steffen realized that users (especially those from affiliate links) wanted more information about the application’s benefits before they could make a decision.

Steffen and his team then redesigned the forms to add information in a way that was visible, accessible, and referable

On mobile, they moved the ‘benefits’ section to the very top

On the desktop version, they created expandable fields for more details on features

3. A/B tests

Steffen’s team then A/B tested the new and old pages to validate their insights. Since they could watch recordings of the pages they were A/B testing, they soon noticed that visitors had more clarity and moved faster on the new version.

As a result of the redesign, re:member’s conversions from affiliate links improved by 43%, and overall conversions improved by 17%.

🚨Key takeaway: ensure your users understand your product’s value (the benefits they’ll get from adopting it) and place that information in prominent locations on your page. Use scroll maps and click maps to ensure users are seeing this key information.

💡Pro tip: use Contentsquare filters to segment users by criteria such as country or new vs. returning to develop a nuanced understanding of user behavior.

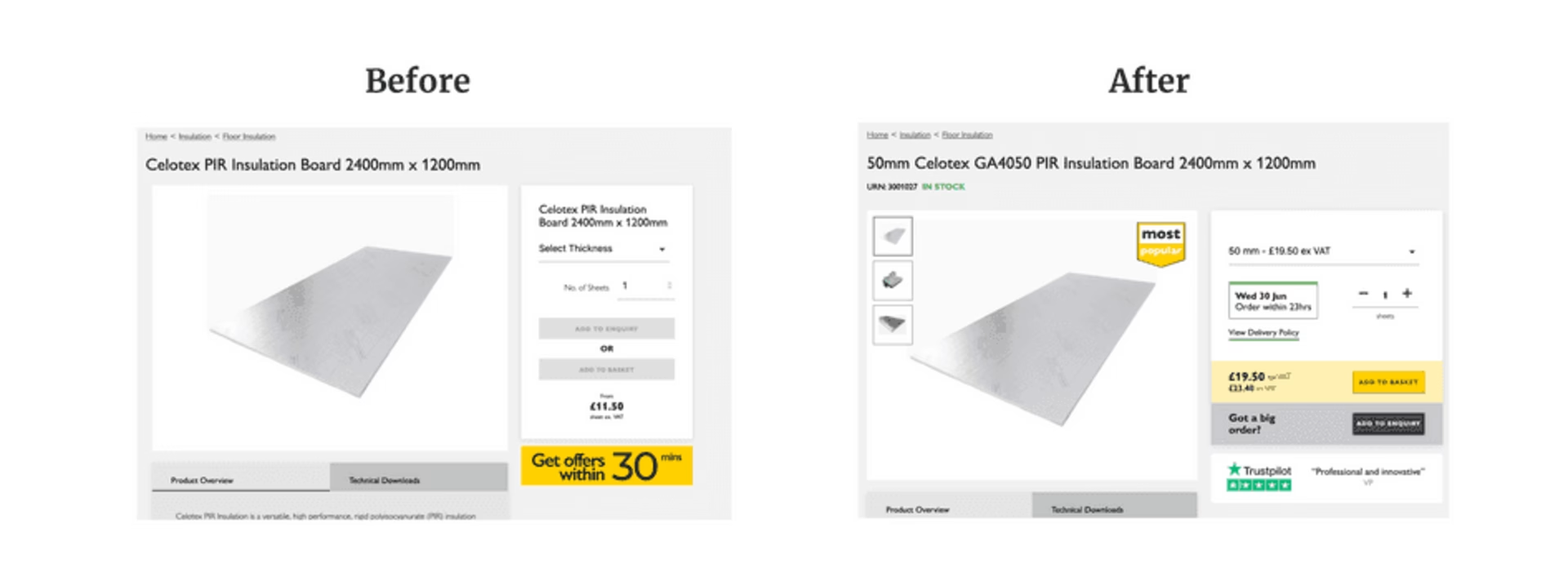

5. How Materials Market improved CTA clicks

The team at Materials Market, a portal for material buyers and suppliers, noticed they had a high bounce rate and wanted to identify blockers in the user experience.

In particular, they wanted to see how their customers were interacting with the newly released ‘Instant Purchase’ option.

1. Recordings

Andrew, the co-founder, watched session recordings every day for 20 minutes to see how users were behaving on the site and realized people were

Confused by multiple CTAs as they were hovering over the buttons before dropping off

Navigating to checkout to see delivery times and then back

2. Heatmaps

The team also reviewed heatmaps for both desktop and mobile devices. The heatmaps for mobile users showed that people didn’t scroll far enough down the page to see their CTA.

![[Visual] Heatmaps & Engagements](http://images.ctfassets.net/gwbpo1m641r7/7q4KlAZp8BvTjZLKa3yf5T/ad3512811b1c7a0b1c9d6846968c14d1/Heatmaps___Engagements.png?w=2048&q=100&fit=fill&fm=avif)

With the knowledge from heatmaps and recordings, Materials Market

👉 Changed the color of the two CTAs so they looked different

👉 Moved the estimated delivery date to the page before checkout

👉 Raised the CTA higher up the page on mobile

As a result, their conversions increased from 0.5% to 1.6%.
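When you report a change like this, it's worth separating the absolute change from the relative one: going from 0.5% to 1.6% is a gain of 1.1 percentage points, but a much larger relative lift. A quick sketch of the arithmetic (the helper name is ours):

```python
def relative_lift(before, after):
    """Relative change between two conversion rates, as a fraction of the starting rate."""
    return (after - before) / before

# Conversion rates from the Materials Market example above.
lift = relative_lift(0.005, 0.016)
print(f"{lift:.0%}")  # prints 220%
```

In other words, the new rate is 3.2 times the old one. Say which figure you're quoting so your team doesn't mix the two up.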

🚨Key takeaway: ensure your primary CTA is above the average fold on your website, so users don’t have to scroll down the page to see it. Also, don’t neglect your mobile experience when testing your product: 60% of all internet users access the web through their mobile devices, so make sure your design is responsive.

💡Pro tip: use Contentsquare’s concept testing capability in Voice of Customer to validate your new initiatives and designs, without spending money on A/B tests. Do this by adding images to surveys across your web pages and inviting user feedback on elements like logos, messaging, color palettes, homepage layouts, or ads.

6. How DashThis increased customer satisfaction by 140%

DashThis, an online report-making tool, was losing customers in the onboarding flow, but they didn’t have a clue as to why.

This was the precursor to an even bigger problem: if users didn’t complete the first 2 steps, their reports showed up empty—shattering customer satisfaction.

The team turned to user tests to find exactly why users were struggling.

Surveys and recordings

DashThis used surveys to ask people questions about the onboarding flow and then analyzed the relevant recordings of the users who had responded. They learned

Users didn’t know where to click to add integrations (buttons were either not prominent or completely invisible)

The list of integrations was unsegmented and confusing

To fix these issues, the team added a search bar to the integrations list and incorporated pop-ups, videos, and different types of content to guide the user step-by-step through onboarding. They also redesigned buttons, making them bolder and more visible on small screens.

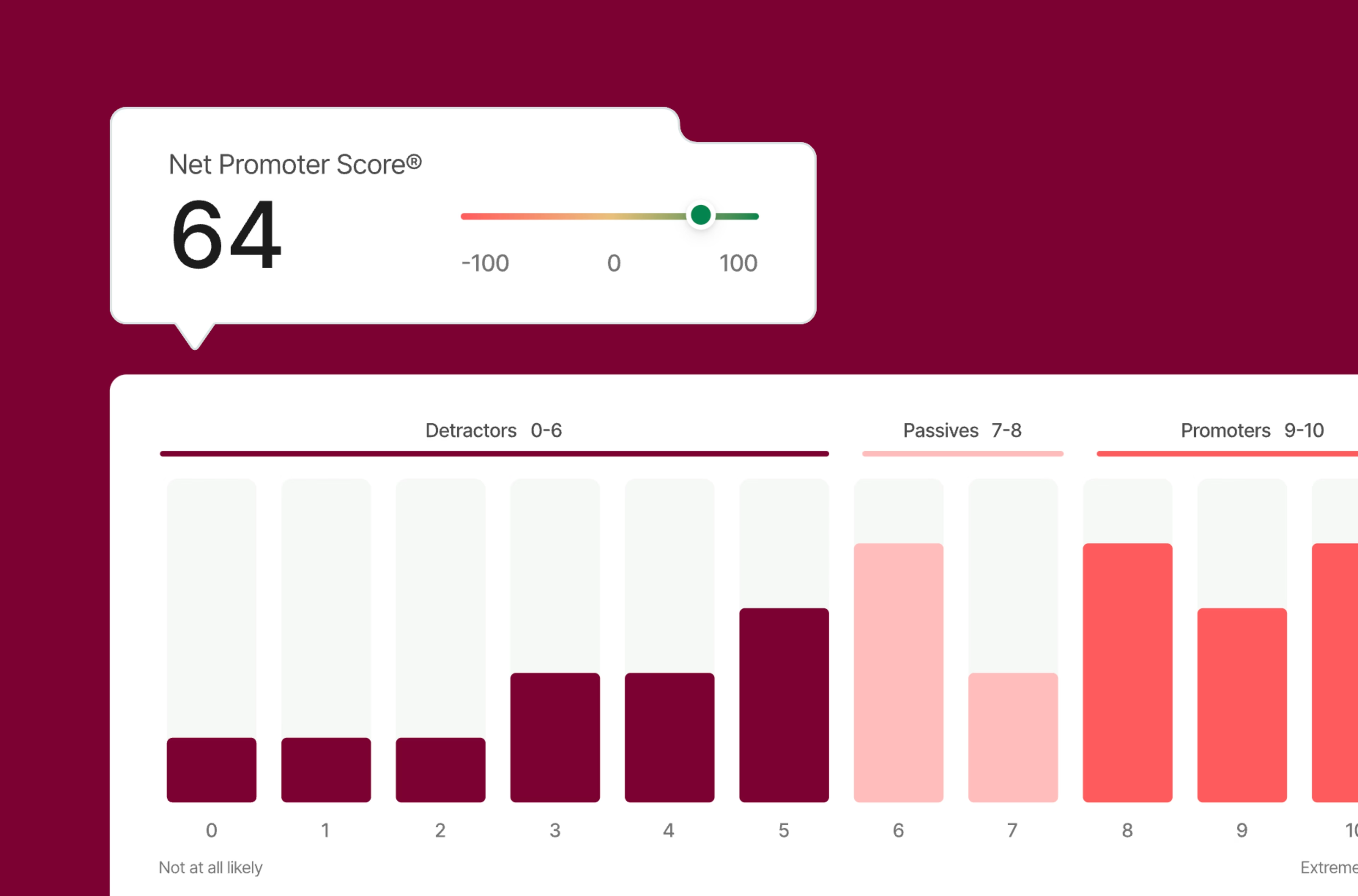

As a result, 50% more users completed onboarding and DashThis’s customer satisfaction saw a 140% increase, according to results from a Net Promoter® Score survey.
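If you want to track a similar metric yourself, the standard Net Promoter Score calculation is simple: on a 0 to 10 "how likely are you to recommend us" question, subtract the percentage of detractors (scores 0 to 6) from the percentage of promoters (scores 9 to 10). The sketch below uses hypothetical responses. The source doesn't say how DashThis derived its 140% figure, so this only illustrates the score itself.

```python
def net_promoter_score(scores):
    """Compute NPS from 0-10 survey answers: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one survey response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)

# Hypothetical survey responses.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(responses))  # prints 20.0
```

Run the same survey before and after a change, like an onboarding redesign, to see whether the score moves.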

🚨Key takeaway: is your website really as clear as you think it is? Do people have the resources they need to easily complete tasks with your product? Ensure your users have all the information they need to complete their jobs to be done by asking them with interviews, a feedback widget, or surveys.

A 10-step checklist to successfully conduct user testing

Since user tests have multiple moving parts, we’ve created a handy step-by-step task checklist so you know exactly how to conduct user testing.

Simply download the PDF version of our checklist here and share it with your team.

Define your objective: what exactly are you hoping to learn from your users? Is it how they navigate your landing page or why they’re abandoning carts? Get crystal clear.

Identify your target audience: who are your users? What are their demographics? What’s their experience with your product?

Develop tasks and scenarios: create tasks and scenarios that reflect real situations your users might encounter when interacting with your product.

Recruit participants: identify potential users and recruit them using survey or interview tools. Consider using a user testing platform to find participants who match your target audience.

Select your testing tool: find one that fits within your budget while also meeting your goals.

Prepare materials and equipment: prepare any materials or equipment, such as survey questions, consent forms, or recording equipment.

Perform user testing sessions and document the results: follow your test plan and document any issues you find.

Analyze the data: review the data and look for patterns or trends. You could use a spreadsheet to organize and analyze your data or let Contentsquare do it for you.

Share your insights: create a summary of your findings and share them with your team. Use your user testing results to improve your product or service.

Iterate and retest: adapt your product based on user feedback, then test again. Celebrate your successes and use what you've learned to continue to improve your product.
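For the "analyze the data" step, a spreadsheet works fine, but a short script can also show which issues come up most often across sessions. Here is a minimal sketch with hypothetical participant notes. The issue labels and the `rank_issues` helper are our own illustration.

```python
from collections import Counter

# Hypothetical notes: the issues observed for each test participant.
session_notes = {
    "P1": ["missed CTA", "confused by pricing"],
    "P2": ["missed CTA"],
    "P3": ["confused by pricing", "search returned nothing"],
    "P4": ["missed CTA"],
}

def rank_issues(notes):
    """Return (issue, participants affected, share of participants), most common first."""
    counts = Counter()
    for issues in notes.values():
        counts.update(set(issues))  # count each issue once per participant
    total = len(notes)
    return [(issue, n, n / total) for issue, n in counts.most_common()]

for issue, n, share in rank_issues(session_notes):
    print(f"{issue}: {n} of {len(session_notes)} participants ({share:.0%})")
```

Ranking issues by how many participants hit them gives you a simple starting point for deciding what to fix first.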

Make user testing an integral part of your workflow

User tests strengthen your relationship with your audience in multiple ways: you understand what they like (the quantitative) and why they like it (the qualitative).

But user testing is not a done-once-dusted-forever kind of exercise. As your users evolve, so do their needs. So make user tests a cyclical part of your product optimization process, and watch your product stickiness, customer satisfaction, and retention soar.

FAQs about user testing examples and checklists:

Studying successful user testing examples helps you understand different use cases for user testing methods while setting expectations for results.

![[Visual] Contentsquare's Content Team](http://images.ctfassets.net/gwbpo1m641r7/3IVEUbRzFIoC9mf5EJ2qHY/f25ccd2131dfd63f5c63b5b92cc4ba20/Copy_of_Copy_of_BLOG-icp-8117438.jpeg?w=1920&q=100&fit=fill&fm=avif)