When you want to optimize your landing page, A/B testing is the most practical way to evaluate your ideas for improvement. This guide shows you how to perform A/B tests, step by step—and learn a whole lot about your users in the process.

Landing page optimization helps you drive conversions, increase revenue, and decrease your cost-per-acquisition. But simply trying to ‘A/B test everything’ without a strategy in place takes up too much valuable time—and it doesn’t deliver results.

Instead, base your A/B tests on a carefully crafted plan, using this clear and repeatable 6-step process.

How to do landing page A/B testing in 6 steps

Follow this sequence for each page you want to test, keeping logs of your decisions so that colleagues can learn from your tests, too.

1. Set clear goals

Start by deciding what you want to achieve with your landing page A/B testing and how it fits in with your business goals.

Why do you want to test? You might want to test because your page is performing below benchmarks, or because you’ve found an indication that users aren’t having a good experience. It’s also totally acceptable to test your page just because you want better results, but there’s a limit to how much you can optimize any one page.

What’s the primary metric you want to improve? Usually on a landing page, you’ll want to improve your key conversion, like ‘Add to cart’ or ‘Download now’ clicks. But depending on your reason for testing, you might want to improve another metric, like bounce rate or first clicks on your form.

When setting goals, it’s also worth determining what improvement you would be happy with. Otherwise, your page testing could go on indefinitely, while other pages need more attention.

Lastly, consider that A/B testing is only effective if your site has sufficient traffic for statistically significant results. Use an A/B test calculator to determine the sample size and test duration you’ll need to get reliable insights.
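If you're curious what an A/B test calculator does under the hood, here is a minimal sketch. It assumes a standard two-sided, two-proportion z-test with a 5% significance level and 80% power, which are common defaults but not necessarily what your calculator uses. The function name and the example rates are made up for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, target_rate, alpha=0.05, power=0.80):
    """Visitors needed in EACH variant for a two-sided two-proportion z-test."""
    if not (0 < baseline_rate < 1 and 0 < target_rate < 1):
        raise ValueError("rates must be between 0 and 1")
    if baseline_rate == target_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    pooled = (baseline_rate + target_rate) / 2
    spread_null = math.sqrt(2 * pooled * (1 - pooled))
    spread_alt = math.sqrt(
        baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
    )
    effect = abs(target_rate - baseline_rate)
    return math.ceil(((z_alpha * spread_null + z_power * spread_alt) / effect) ** 2)


# Hypothetical page: 5% baseline conversion rate, hoping to reach 6%.
n = sample_size_per_variant(0.05, 0.06)
print(f"Visitors needed per variant: {n}")
```

Notice that the smaller the improvement you want to detect, the more visitors you need. That is one more reason to decide up front what improvement you would be happy with.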

2. Gather data

There are countless things you could change on your landing page (we’ll look at some of them in a moment).

So, how do you decide which ones to implement? Randomly changing and testing page elements is not the answer: relying on guesswork is an inefficient way to optimize your page—and even if you get lucky with a test, you won’t know why users preferred one variant over another.

Instead, gather data about user perspectives and behaviors to inform your strategy:

Use your preferred analytics tool to check key metrics on the page

For instance, looking at the ‘Average engagement time’ metric in Google Analytics 4 tells you whether users actually consume the content on your page, or land on it and quickly leave. You can also compare other metrics, like your conversion rate, to see if the page is performing above or below your site’s benchmarks. These metrics help you understand what’s happening on the page before you look at other data to understand why.

Learn how users are behaving on the page

This is where an experience intelligence platform like Contentsquare (hi!) comes in handy. For instance, use Zone-Based Heatmaps to see where users tend to click, move, or scroll to. Do they stop scrolling before reaching important areas, or click on non-clickable page elements? These close-up insights reveal if your page is intuitive and helpful to users.

Dive deep into users’ perspectives and experiences

For any conversion optimization project, you need a deep understanding of your users’ needs and perspectives. In particular, you need to get familiar with:

Drivers that bring them to your website

Barriers that make them leave

Hooks that persuade them to convert

If you haven’t already carried out this kind of research, it’s a good idea to speak to your users about the products in your campaign. Recruit participants from your target audience and conduct user interviews.

Back this up with surveys asking focused questions around users’ drivers, barriers, and hooks. You can embed these surveys across your website—but ideally not on your campaign landing pages, where they could distract users and reduce conversions.

Pro tip: use tools like heatmaps and Session Replay to map the user journey and identify where to place your surveys.

Heatmaps show you which areas of your site get the most attention, whereas replays reveal where users get frustrated and drop off, helping you determine on which pages to deploy exit surveys.


3. Create a testing hypothesis

Now that you’ve gathered a range of data, you hopefully have an idea of user behavior and perceptions related to your page. It’s time to create a hypothesis for what might improve the page.

Useful hypotheses are testable and based on reliable data or observations. They involve proposing a solution and predicting an outcome, with reasoning based on your understanding of your users. For example, they might look like this:

“If we remove the buttons that are not relevant to users’ primary goal on this page, conversions will increase because users will have fewer distractions from the main CTA.”

Your hypothesis should be based on the insights you’ve gained in the previous steps. In other words, you should be somewhat confident that you know how to improve the page—your A/B test is the final step in the conversion rate optimization process.

You’ll usually end up with one or more elements that you want to add, change, move, or scrap altogether.

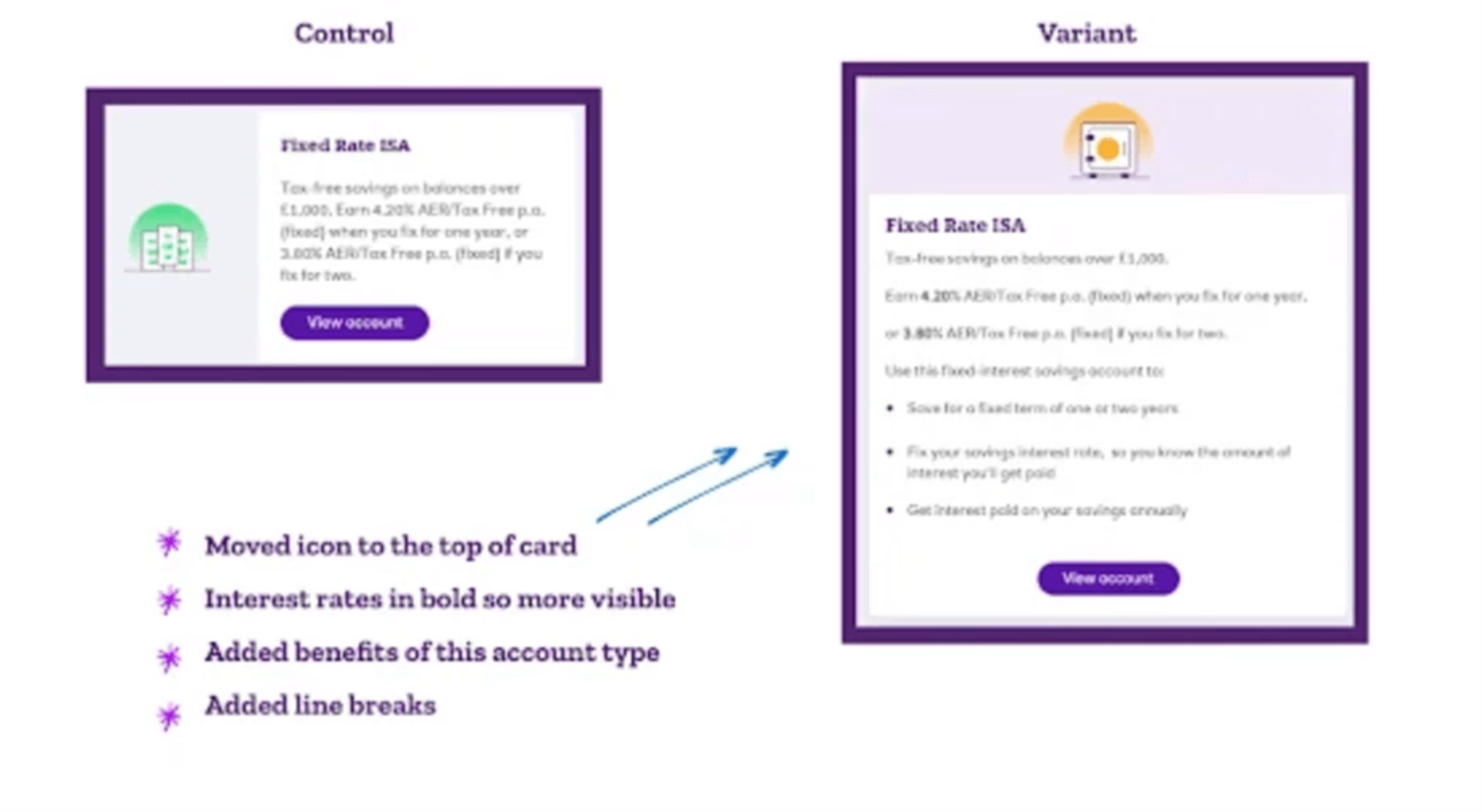

Contentsquare 🤝 NatWest: creating a winning hypothesis based on user engagement

U.K. retail bank NatWest used our Experience Analytics platform to analyze its mobile app savings hub page. They found the page had a high exit rate, with users scrolling far down the page before leaving. Since high volumes of users came to NatWest through this page, it was important to remedy the problem quickly.

The team hypothesized that the hub page design didn’t have enough key information that users were looking for. To test this, they tried a new version of the page that featured a more concise and scannable Fixed Rate ISA card.

NatWest’s A/B test on the mobile app savings hub page

By using Contentsquare’s side-by-side comparison of the original and new versions, they saw a big improvement in the number of users completing their applications.

Thanks to this simple A/B test and the insights they gained, NatWest was able to make a real difference in their customer journey.

👉 Read the full NatWest case study

4. Prepare the new landing page elements you want to test

Usually, you’ll want to change some of the landing page elements listed below—but theoretically, anything on your page could affect conversions.

⚠️ Remember, the more elements you change in any one test, the less you’ll understand which change impacted test results.

Headlines

An effective headline immediately hooks visitors into reading on by making a promise or solving a pain they care about. What’s more, it sets the context for the rest of the page, presenting a narrative that everything else flows from.

This landing page headline from Planable makes a clear promise about what the product delivers

Test your headlines if:

Your research shows that your current headline doesn’t resonate with users—or isn’t connected with their motivations and pain points

Your analytics reveal low time on page, suggesting that the headline doesn't ‘hook’ users into reading on

Subheadings

Users will often scan a page rather than reading every word top to bottom. That means your subheadings need to tell the most important parts of the story and highlight key sales arguments.

If your subheadings aren’t clear or persuasive, consider testing new versions in line with your testing hypothesis.

“Subheadings are almost as important as your main headline. If your landing page has placeholder subheadings that say something like ‘Features’ or ‘Why us’, consider testing subheadings that work harder to sell your product. For example, a subheading that just says ‘Features’ could become ‘Automate your workflow from start to finish’. Every subheading should either convey important information, like a key benefit, or entice the user to read more.”

Jon Evans, Copywriter, Electric Copy

Body copy

Your body copy is responsible for explaining the finer details of your offer to users who are invested in learning more. While marketers tend to A/B test body copy less frequently than other elements, the right sales arguments can improve your conversions. Consider changing:

Length

Readability (e.g. changing a long sentence into bullet-point format)

Focus (i.e. the feature your body copy talks about)

Images and video

Images and video are an effective way to grab your users’ attention and convey important information about your product.

If your data suggests visitors are not staying on the page for long, add a video near the top of the page to pique their interest

If your page is mostly text, consider adding images that would help users scan and understand the page

If your page already has images, consider changing them—heatmaps can help you understand how users interact with them

🏆 Optimize like a pro

Heatmaps showing that users often click on non-clickable images suggest your audience wants to know more. Consider adding more information on the topic or turning the image into a video in your new page variant.

Pricing and information tables

If your data suggests that users need more logistical information, consider adding a table or making your existing one more prominent. Alternatively, if your research suggests that users are getting distracted, give them the option of displaying a ‘hidden’ table by clicking a button.

Ecommerce store Katin hides its size chart behind a link on its product pages

CTA buttons

Your CTA buttons might be only a small part of your page, but they’re arguably your most important conversion elements. Consider testing their:

Color and shape

Position on page

Wording

Quantity (multiple different CTAs vs. one repeated CTA)

HubSpot uses an attention-grabbing orange color and simple wording on its CTA buttons

Trust factors

Client logos, awards, and testimonials all affect whether visitors trust your page. But too many can also make your page cluttered—so if your data suggests that users find them distracting or overwhelming, consider narrowing down your selection.

Forms

Many conversion studies show that the UX design of forms has a significant impact on conversions. Reducing friction when users find or fill in the form can go a long way. Consider testing:

Number of form fields

Field labels and label positioning

Trust signals (e.g. security and credit card logos)

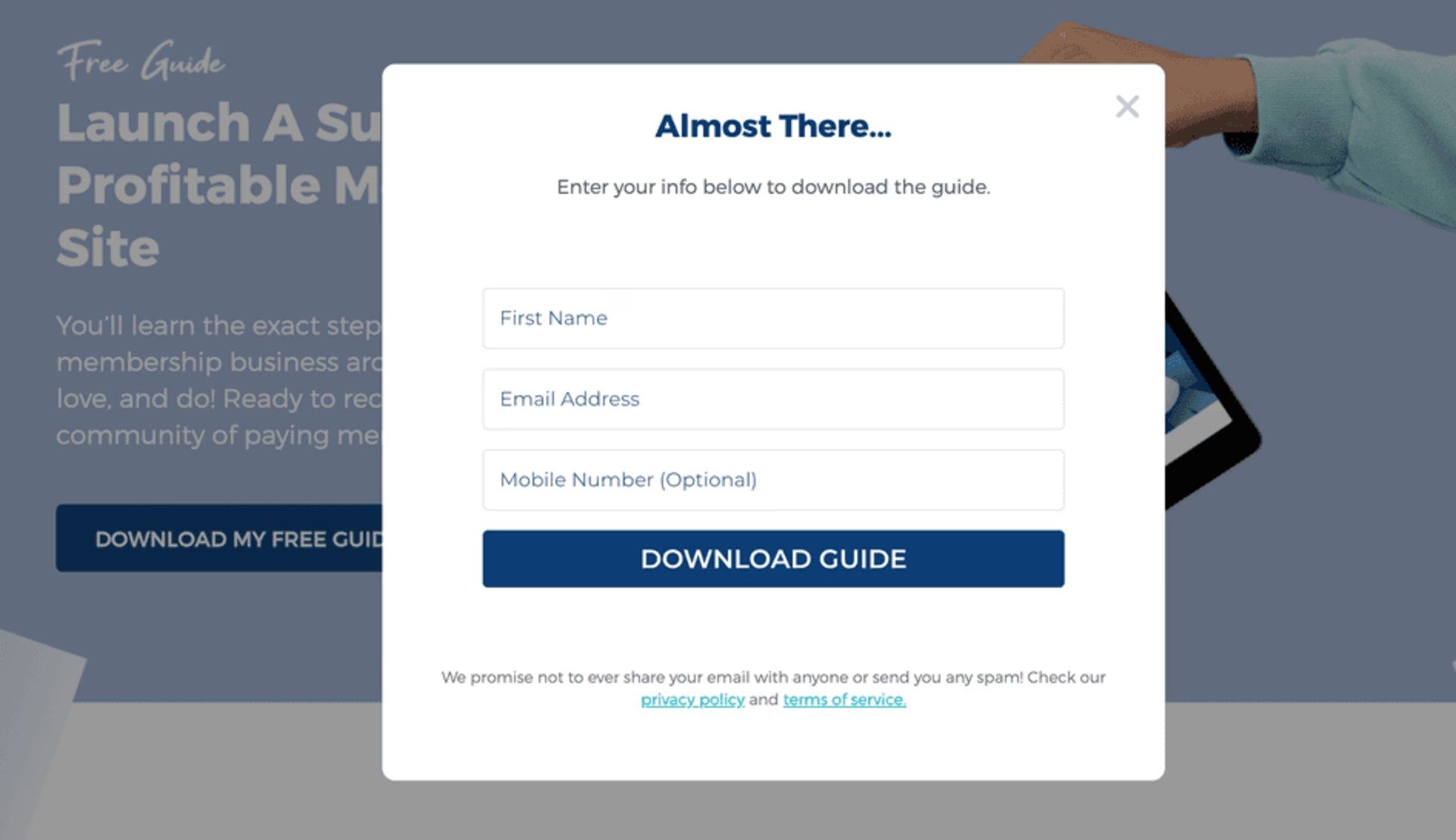

On Membership Workshop, the form is hidden until users click the page’s main CTA button

Page structure

Even when your page has all the right ‘ingredients’, you might want to A/B test a new variant that presents them in a different order. Consider changing:

Sequencing of key sales arguments (such as feature descriptions)

Positioning of forms and CTA buttons

Positioning of special offer information

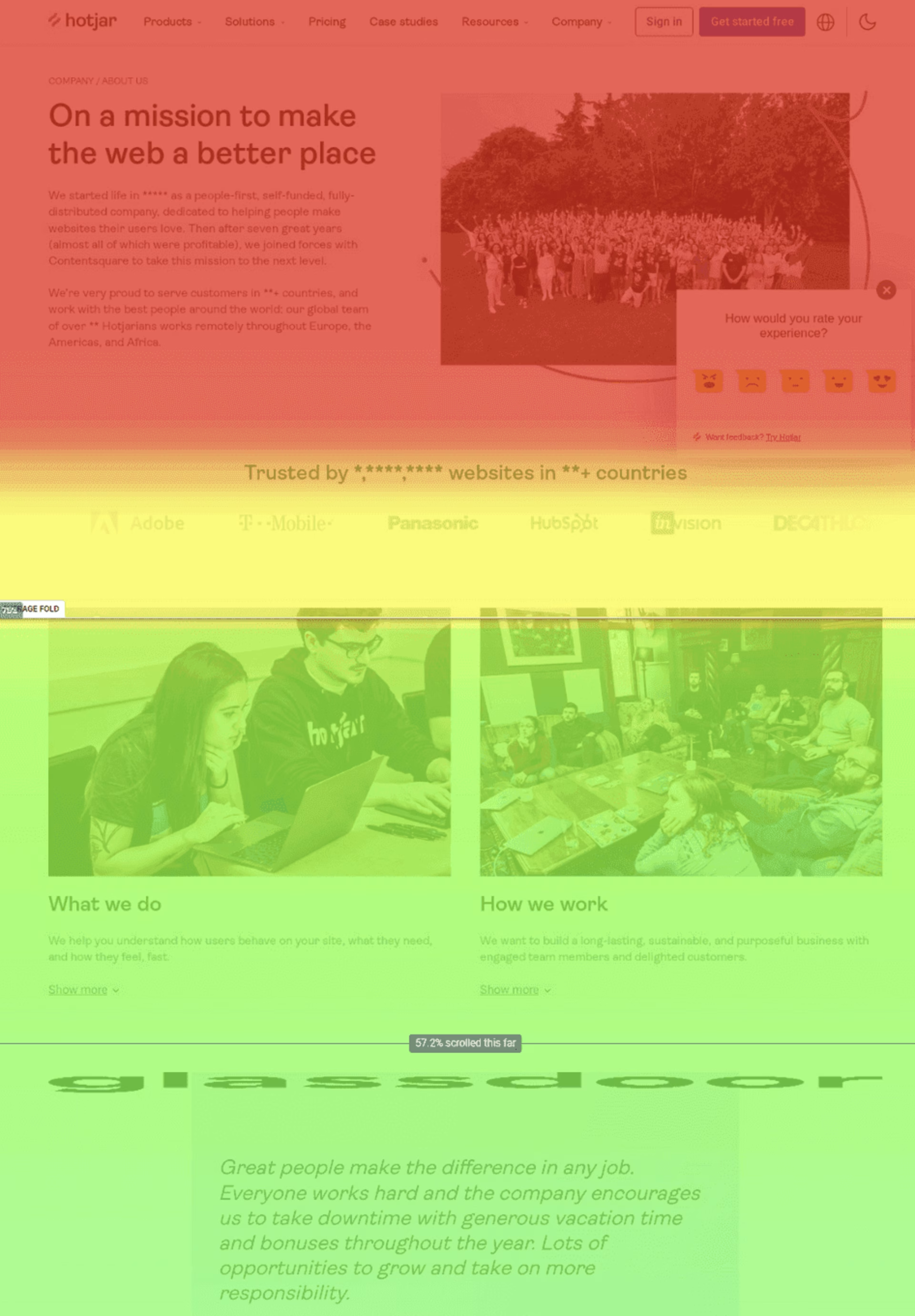

By looking at scroll maps, you’ll get an instant overview of how far users typically scroll—and find out if they see key page elements.

A scroll map from Hotjar (part of the Contentsquare group) uses ‘cooler’ colors to show the parts of the page that fewer users scroll down to

5. Set up your test

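With your new page elements ready, you can now create a ‘B’ variant in your landing page builder. If you have enough traffic, you could also create several page variants (A/B/n testing) or test combinations of elements (multivariate testing), but remember that every extra variant needs its own share of visitors.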

Depending on your tech stack, you’ll likely want to connect an A/B testing tool, like AB Tasty or Optimizely. These tools make it easy to ‘split’ your traffic and measure the performance of each page variant.
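To get a feel for what ‘splitting’ traffic means, here is a simplified sketch of one common approach: hashing a visitor ID so that each visitor lands in the same variant on every visit. This is an illustration only, not how AB Tasty or Optimizely work internally. The function name, experiment name, and visitor ID format are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id, experiment="landing-page-test", variants=("A", "B")):
    """Deterministically map a visitor to a variant with roughly equal shares."""
    # Including the experiment name means the same visitor can fall into
    # different buckets in different experiments.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# The same visitor always gets the same variant on repeat visits.
print(assign_variant("visitor-123"))
```

Sticky assignment matters because a visitor who sees both variants would muddy your results.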

Finally, set up an all-in-one digital experience suite like Contentsquare. With capabilities like heatmaps and replays, you can take a closer look at what users are doing on each page variant, bringing a qualitative understanding to your quantitative data.

To get statistically reliable results, you’ll need to let your test run for long enough. If you stop it too early, you won’t capture enough data, and your results may have too much ‘noise’ to be meaningful.

(💡 If in doubt, check the recommendation from the A/B testing calculator you used in step one.)
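As a rough cross-check on your calculator, you can estimate the duration yourself from the required sample size and your daily traffic. The sketch below assumes traffic is split evenly between variants and rounds up to whole weeks so that weekday and weekend behavior are both covered. The rounding rule is a common convention rather than a requirement, and the function name and numbers are hypothetical.

```python
import math


def estimate_duration_days(sample_per_variant, daily_visitors, variants=2):
    """Days needed to reach the sample size, rounded up to whole weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    days = math.ceil(sample_per_variant * variants / daily_visitors)
    return math.ceil(days / 7) * 7  # whole weeks, to cover weekday/weekend swings


# Hypothetical page: 5,000 visitors needed per variant, 1,000 visitors a day.
print(estimate_duration_days(sample_per_variant=5000, daily_visitors=1000))  # prints 14
```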

Pro tip: integrate your A/B testing data with Contentsquare.

Learn why one page variant outperformed another by integrating Contentsquare with testing tools.

For instance, using our AB Tasty integration adds a visual layer of insights to your experiments, so you can understand why your tests win or lose and iterate on successes.

Contentsquare’s analytics provide deep insights into user behavior, while AB Tasty empowers you to act on those insights in real time. Together, we offer a 360-degree view of your user journey.

Contentsquare’s side-by-side Page Zoning works together with AB Tasty’s robust experimentation and personalization features to compare 2 different test variations, devices, metrics, or customer segments.

This gives you an easy, intuitive visual view to understand why and how visitors acted differently from the default.

Contentsquare’s side-by-side analysis in a single view is a game-changer for A/B testing

6. Review your test data

Check your A/B testing tool to learn which of your page variants ‘won’ in terms of achieving higher conversion rates (or whatever key metric you’re trying to improve).

If your new version beats the original by a statistically significant margin, you might want to replace your original variant with it and move on to optimizing other landing pages. Alternatively, keep the winning variant as your ‘A’ variant, and run another test with a new ‘B’ variant.
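Your testing tool will normally report significance for you, but it can help to see roughly how such a check works. The sketch below runs a two-sided, two-proportion z-test on raw counts. This is one standard method, and your tool may use a different one (for example, a Bayesian approach). The function name and the counts are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, visitors_a, conv_b, visitors_b):
    """Return (relative_lift, two_sided_p_value) for variant B versus variant A."""
    if conv_a <= 0 or conv_a + conv_b >= visitors_a + visitors_b:
        raise ValueError("need some conversions in A and some non-converting visitors")
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return (rate_b - rate_a) / rate_a, p_value


# Made-up counts for illustration only.
lift, p = two_proportion_z_test(conv_a=400, visitors_a=8000, conv_b=480, visitors_b=8000)
print(f"Relative lift: {lift:.1%}, p-value: {p:.4f}")
```

A p-value below your chosen threshold (often 0.05) suggests the difference is unlikely to be down to chance alone. Checking it repeatedly while the test is still running makes a false positive more likely, so wait until you reach your planned sample size.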

Remember: if you’re going to keep running A/B tests, it’s important to understand why your new variant won. This is where analyzing your qualitative data helps you get a full picture of the results.

For example:

If you changed your CTA, did the new version get more or less attention in the page variant?

If you moved your CTA to a new position, do your heatmaps indicate that users are scrolling to or engaging with the new location?

What happens if your test isn’t successful?

If your ‘B’ variant doesn’t beat the ‘A’ variant, it doesn’t mean your test was a failure. The data didn’t support your hypothesis, so you still learned a valuable lesson about what your users do (and don’t) respond to.

So, to get the most out of each test:

Keep records of what you changed in each variant, and what the results were. This way, other members of your team can refer to past tests in future.

Analyze your page metrics and user behavior data to learn why your page didn’t perform better. Do replays suggest that users were confused or distracted by a new page element? Do heatmaps show users weren’t scrolling down to your new CTA button?

In many cases, the answers are waiting in your data—and doing a little ‘detective work’ can be what makes your next A/B test more successful.
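If you don’t already have a place to keep test records, a lightweight structured log is one option. The sketch below is only a suggestion: the field names are hypothetical, the values are invented, and a shared spreadsheet would do the same job.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ExperimentRecord:
    page: str
    hypothesis: str
    change: str
    primary_metric: str
    rate_a: float
    rate_b: float
    outcome: str      # e.g. "B won" or "no significant difference"
    notes: str = ""   # what heatmaps, replays, or surveys suggested


def to_log_line(record):
    """Serialize one experiment as a single JSON line for a shared log file."""
    return json.dumps(asdict(record))


# Invented example values, for illustration only.
record = ExperimentRecord(
    page="/landing/spring-offer",
    hypothesis="Removing buttons unrelated to the main goal will increase conversions",
    change="Removed secondary buttons above the fold",
    primary_metric="CTA clicks",
    rate_a=0.050,
    rate_b=0.051,
    outcome="no significant difference",
    notes="Heatmaps show most users never scrolled to the CTA",
)
print(to_log_line(record))
```

Writing down the hypothesis alongside the result makes it easier for colleagues to see not just what you tested, but why.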

Put your users at the center of your A/B testing process

A/B testing is an exciting process, and there’s nothing better than seeing your new page variant smash your previous conversion rates. But it’s easy to see A/B testing as the end in itself, and lose focus on the real goal: creating better user experiences.

After all, what your users want isn’t a perfectly optimized landing page—but a frictionless way to get the products and services they need. So when you’re optimizing your landing pages, start (and finish) by learning about your users. The more you understand their drivers, barriers, and hooks, the better equipped you are to make the right A/B testing decisions.

FAQs about landing page A/B testing

When does split testing end?

Split testing, or A/B testing, usually ends once you have gathered enough data to draw a statistically significant conclusion. That means you’ve had enough visitors to be reasonably confident that the difference between your test variants isn’t down to chance.

If your website has high volumes of traffic, you could potentially run a test in a few days—but usually it will be several weeks, or even months. Every website is different, so we recommend using an A/B testing calculator to work out how long your test should last.

What are the best practices for A/B testing a landing page to improve conversion rates?

To improve your landing page conversion rates, we recommend that you:

Set clear goals for testing

Gather data that helps you understand user drivers, barriers, and hooks

Create a test hypothesis

Prepare your new page elements

Set up your test with relevant tools

Review your test data (use conversion data in combination with user behavior data to understand what users did and why)