To build a website your customers love, you need to understand which features give them a thrill—and which put them to sleep. A/B testing takes the guesswork out of engaging with users, providing real insights to help you identify and optimize your website’s best features.

When you run A/B tests regularly, you can measure your team’s theories against real-world data and develop better products for your customers—a win-win situation!

This guide covers the fundamentals of A/B testing on websites and why it’s important for you, your business, and the people who matter most: your users. As you go through each chapter, you’ll learn how to develop, evaluate, and optimize your A/B tests, and how to check whether each change produces positive results.

What is A/B testing?

A/B testing is a research method that runs two versions of a website, app, product, or feature to determine which one performs better. It’s a component of conversion rate optimization (CRO) that you can use to gather both qualitative and quantitative user insights.

An A/B or split test is essentially an experiment where two test variants are shown to users at random, and statistical analysis is used to determine which variation performs better for a given conversion goal.
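The "shown to users at random" part is usually handled by your A/B testing tool, but the idea is simple enough to sketch. Below is a minimal illustration that assumes one common approach, hash-based bucketing: hashing the experiment name together with a user ID gives each visitor a stable, effectively random variant. The function name `assign_variant` and the experiment key `cta-color-test` are hypothetical, not part of any specific product.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Return a stable, pseudo-random variant for this user and experiment."""
    # Hashing experiment + user ID means the same visitor always lands in the
    # same bucket, while different experiments split users independently.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Simulate 10,000 visitors; the split should land close to 50/50.
    counts = Counter(assign_variant(f"user-{i}", "cta-color-test") for i in range(10_000))
    print(counts)
```

Deterministic assignment matters because a returning visitor who flips between variations would blur the comparison between A and B.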

In its simplest form, an A/B test validates (or invalidates) ideas and hypotheses. Running this type of test lets you ask focused questions about changes to your website, and then collect data about the impact of those changes.

By testing your assumptions with real users, you save a ton of time and energy that would otherwise have been spent on iterations and optimizations.

What A/B testing won’t show you

An A/B test compares the performance of two items or variations against one another. On its own, A/B testing only shows you which variation is winning, not why.

To design better experiments and impactful website changes, you need to understand the deeper motivations behind user behavior, rather than relying on guesswork, chance, or stakeholder opinions.

Combining test results with qualitative insights—the kind you get from heatmap, session replay, survey, and feedback tools—is a ‘best of both worlds’ approach that allows you and your team to optimize your website for user experience and business goals.

Why is A/B testing important?

A/B testing is a systematic method used to determine what works (and what doesn’t) for any given asset on your website—a landing page, a logo, a color choice, a feature update, the entire user interface (UI), or your product messaging.

Whether you're using split tests in marketing campaigns and advertising or A/B testing for product management and development, a structured testing program makes your optimization efforts more effective by pinpointing crucial problem areas that need improvement.

Through this process, you can help your website achieve its intended purpose, whether that’s driving engagement or revenue, and gain other valuable benefits:

A better user experience: performing A/B tests highlights the features and elements that have the most impact on user experience (UX). This knowledge helps your team develop ideas and make customer-centric, data-informed UX design decisions.

Improved customer satisfaction: gaining insights into what users enjoy helps you deliver a website and product that customers love. Whatever their goal is on your site, A/B testing helps address users' pain points and reduce friction for a more satisfying experience.

Low-risk optimizations: A/B testing lets you target your resources for maximum output with minimal modifications. Launching an A/B test helps you find out whether a proposed change will please your target audience before you roll it out to everyone, which makes a positive outcome more likely.

Increased user engagement: A/B testing removes the guesswork from CRO by directly engaging and involving users in the decision process. It lets the users decide—they vote with their actions, clicks, and conversions.

Less reliance on guesswork: A/B tests challenge assumptions and help businesses base decisions on data rather than gut feelings, for everything from email subject lines and website information architecture to a complete website redesign.

Increased conversion rates: by showing how specific elements of the experience affect user behavior, A/B testing helps you get more out of your existing traffic and increase conversions.

Optimized website performance: A/B testing lets you measure how minor, incremental changes affect website performance, including backend experiments on APIs, microservices, clusters, and architecture designs to improve reliability.

Validated decision-making: use A/B testing to validate the impact of new features, performance improvements, or backend changes before rolling them out to your users.

Keep in mind: A/B testing starts with an idea or hypothesis based on user insights.

Contentsquare is an Experience Intelligence Platform designed to give you actionable user data. The platform automatically captures behavioral and performance data across web and apps, drawing insights from 4 analytics domains, for a complete understanding of customers and their journeys.

These 4 domains help you collect user insights and understand why winning A/B tests succeed, so you can double down on what works:

Digital Experience Analytics (DXA): analyze how users interact with your website, from clicks to browsing patterns, to improve user journeys

Digital Experience Monitoring (DEM): continuously monitor site performance and user behavior to identify technical issues and optimize user experiences

Product Analytics (PA): track and measure the performance of digital products, understanding how users engage with specific features

Voice of Customer (VoC): collect direct user feedback through surveys and widgets to gain insights into user satisfaction and preferences

For example, using the platform you can:

Discover user journeys and compare successful vs. unsuccessful sessions side-by-side

Understand in-page behavior and what makes customers click vs. what leaves them cold

With a wide range of integrations and capabilities, Contentsquare helps you spot common areas of friction in the user experience, get ideas for improvements, and find out if your fixes are working, and why or why not.

Contentsquare gives you a more informed perspective on which A/B tests to run next, so you can prioritize the ones most likely to move the needle for your conversion goals.

How do you run an A/B test?

Running A/B tests is more than just changing a few colors or moving a few buttons—it’s a process that starts with measuring what’s happening on your website, finding things to improve and build on, testing improvements, and learning what worked for your customers.

Check out our comprehensive guide on how to do A/B testing for your website for everything on developing, evaluating, and optimizing A/B tests. In the meantime, here’s a quick framework you can use to start running tests:

Developing and setting up A/B tests

From getting a clear understanding of user behavior to connecting problems to opportunities for improvement, the A/B testing process for websites is all about your users.

🔍 Researching ideas

A/B testing focuses on experimenting with theories that come from studying your market and your customers. Both quantitative and qualitative research help you prepare for the next step in the process: making actionable observations and generating ideas.

Collect data on your website's performance—everything from how many users are coming onto the site and the various conversion goals of different pages to customer satisfaction scores and UX insights.

Use heatmaps to determine where users spend the most time on your pages and to analyze their scrolling behavior. Session replay tools also help at this stage: they record visitor behavior, which helps you identify gaps in the user journey and discover problem areas on your website.

![[Visual] Session Replay | Error Details](http://images.ctfassets.net/gwbpo1m641r7/5DZbkzo3qFEHFqYFZ7eKsE/755984f322cabf5a7554ccbf5c244e6c/Screenshot_2024-11-04_at_21.10.30.png?w=3840&q=100&fit=fill&fm=avif)

An example of a Contentsquare Session Replay

💡 Identifying goals and hypotheses

Before you A/B test anything, you need to identify your conversion goals—the metrics that determine whether or not the variation is more successful than the original version or baseline.

Goals can be anything from clicking a button or link to purchasing a product. Set up your experiment by deciding what variables to compare and how you'll measure their impact.

Once you've identified a goal, you can begin generating evidence-based hypotheses. These identify which aspects of the page or user experience you’d like to optimize and how you think a change will affect performance.

📊 Executing the test

Remember, you started the A/B testing process by making a hypothesis about your website users. Now it’s time to launch your test and gather statistical evidence to accept or reject that claim.

Create a variation based on your hypothesis of what might work from a UX perspective, and A/B test it against the existing version. Use A/B testing software to make the desired changes to an element of your website. This might be changing a CTA button color, swapping the order of elements on the page template, hiding navigation elements, or something entirely custom.

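The goal is to gather data showing whether your test hypothesis was correct, incorrect, or inconclusive. Reaching a reliable result can take a while: you need a large enough sample to detect the effect you care about, and how long it takes to collect that sample depends on how much traffic the tested page receives.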
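To get a feel for how big a sample you need, you can use the standard normal-approximation formula for comparing two conversion rates. The sketch below is illustrative only and assumes a two-sided test at a 5% significance level with 80% power; the function name `sample_size_per_variant` and the example rates are hypothetical, and your A/B testing tool may use a different method.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, mde, alpha=0.05, power=0.8):
    """Visitors needed in EACH variant to detect an absolute lift of `mde`
    over a `baseline` conversion rate with a two-sided two-proportion test."""
    p1, p2 = baseline, baseline + mde
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha=0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / mde ** 2)


if __name__ == "__main__":
    # Hypothetical goal: detect a lift from a 5% to a 6% conversion rate.
    n = sample_size_per_variant(baseline=0.05, mde=0.01)
    print(f"{n} visitors per variant")
```

With these assumptions, the answer comes out at a little over 8,000 visitors per variant. If the page gets 1,000 visitors a day split evenly between A and B, that is roughly 17 days of traffic. Smaller expected effects need much larger samples, because the required size grows with the inverse square of the effect.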

Important note: ignoring statistical significance is a common A/B testing mistake. A good A/B testing tool will tell you whether your results are statistically significant and trustworthy. Without that check, it’s hard to know whether a difference between variations reflects a real effect or just random chance.
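One common way to check significance for conversion rates is a two-proportion z-test. The sketch below shows the idea under stated assumptions: a two-sided test, large samples, and at least one conversion and one non-conversion across both variants (otherwise the standard error is zero). The function name and the conversion counts are hypothetical; commercial tools may use other methods, such as Bayesian or sequential statistics.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Under the null hypothesis both variants share one conversion rate,
    # so the standard error uses the pooled rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 400/8,000 conversions for A, 480/8,000 for B.
    z, p = two_proportion_z_test(400, 8000, 480, 8000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

A p-value below your chosen threshold (commonly 0.05) suggests the difference is unlikely to be due to chance alone. Check it only once the planned sample size is reached, since repeatedly peeking and stopping early inflates false positives.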

How to generate A/B testing ideas and hypotheses

Identify where visitors leave your website: use traditional analytics tools to see where people exit your website and complement those insights with a conversion funnels tool

Collect customer feedback: use on-page surveys and feedback widgets to get open-ended feedback about what users really think about your website

Run usability testing: usability testing tools give you insight into how real people use your website and allow you to get their direct feedback about the issues they encounter and the solutions they'd like to see

Study session replays: observe individual users as they make their way through your website, so you can see the experience from their perspective and notice what they do right before they exit

![[Visual] Exit-Intent Survey](http://images.ctfassets.net/gwbpo1m641r7/1UqasWRBnczjIUsx8wDIMB/149e80ca4b764400d3295ddb4c9c254b/Feedback_Widget__1_.png?w=1920&q=100&fit=fill&fm=avif)

Contentsquare surveys are a way to engage your users in conversation directly
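A conversion funnels tool does the work of finding where visitors leave, but the underlying calculation is straightforward: compare how many visitors reach each step with how many reach the next. The sketch below assumes you have already exported visitor counts per funnel step; the step names, counts, and the `funnel_dropoff` helper are all hypothetical.

```python
def funnel_dropoff(steps):
    """Given (step_name, visitors) pairs in funnel order, return the share of
    visitors lost between each pair of consecutive steps."""
    report = []
    for (name, count), (next_name, next_count) in zip(steps, steps[1:]):
        drop = 1 - next_count / count if count else 0.0
        report.append((f"{name} -> {next_name}", drop))
    return report


if __name__ == "__main__":
    # Hypothetical funnel counts, e.g. exported from an analytics tool.
    funnel = [("Product page", 10_000), ("Cart", 3_000),
              ("Checkout", 1_500), ("Purchase", 900)]
    for transition, drop in funnel_dropoff(funnel):
        print(f"{transition}: {drop:.0%} drop-off")
    worst = max(funnel_dropoff(funnel), key=lambda item: item[1])
    print("Biggest leak:", worst[0])
```

The step with the largest drop-off is a natural place to start watching session replays and asking survey questions, since that is where a test hypothesis is most likely to pay off.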

Tracking and evaluating A/B tests

Once your experiment is complete, it’s time to analyze your results. Your A/B testing software will measure data from both versions and present the differences between their performance, indicating how effective the changes were and whether there is a statistically significant improvement.
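Beyond a yes/no significance verdict, it helps to see how large the difference between versions is and how uncertain that estimate is. The sketch below computes the absolute and relative lift of variation B over A plus a normal-approximation (Wald) confidence interval for the difference. It is a simplified illustration, not how any particular tool reports results; the function name and the counts are hypothetical, and it assumes A has at least one conversion.

```python
import math
from statistics import NormalDist


def lift_with_ci(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Absolute and relative lift of B over A, with a Wald confidence
    interval for the absolute difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    # Unpooled standard error: each variant keeps its own observed rate.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {
        "rate_a": p_a,
        "rate_b": p_b,
        "absolute_diff": diff,
        "relative_lift": diff / p_a,  # assumes A has at least one conversion
        "ci": (diff - z * se, diff + z * se),
    }


if __name__ == "__main__":
    result = lift_with_ci(400, 8000, 480, 8000)
    low, high = result["ci"]
    print(f"A: {result['rate_a']:.2%}  B: {result['rate_b']:.2%}")
    print(f"Relative lift: {result['relative_lift']:.1%}")
    print(f"95% CI for the difference: {low:+.2%} to {high:+.2%}")
```

If the whole interval sits above zero, the data favor B; if it straddles zero, the result is inconclusive at that confidence level, even if B’s observed rate is higher.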

Analyzing metrics and interpreting results

A/B testing programs live, mature, evolve, and succeed (or fail) through metrics. By measuring the impact of different versions on your metrics, you can check whether each change to your website produces positive results for your business and your customers.

To measure the impact of your experiments, start by tracking these A/B testing metrics:

Conversion rate: the percentage of visitors who take a desired action, like completing a purchase or filling out a form

Click-through rate (CTR): the number of times a user clicks on a link or call to action (CTA) divided by the number of times the element is viewed

Abandonment rate: the percentage of tasks on your website that users start but abandon before completing. For an ecommerce website, this can mean users adding items to their shopping cart and leaving without purchasing them.

Retention rate: the percentage of users who return to the same page or website after a certain period

Bounce rate: the percentage of visitors to a website who navigate away from the site after viewing only one page

Scroll depth: the percentage of the page a visitor has seen. For example, if the average scroll depth is 50%, it means that, on average, your website visitors scroll far enough to have seen half of the content on the page.

Time on site: the average amount of time a user spends on your website before leaving

Average order value (AOV): the average amount of money spent by a customer on a single purchase

Customer satisfaction score (CSAT): how satisfied customers are with your company's products or services
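To make a few of these definitions concrete, here is a small sketch that computes conversion rate, bounce rate, CTR, and AOV from per-session records. The `Session` fields are a hypothetical data shape, not an export format from any particular tool, and the sketch assumes the conversion goal is a purchase. In practice you would run it once per variant and compare the results.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Session:
    pages_viewed: int
    converted: bool           # assumed conversion goal: a completed purchase
    order_value: float = 0.0  # revenue from this session, 0 if no purchase
    cta_views: int = 0        # times the CTA was displayed
    cta_clicks: int = 0       # times the CTA was clicked


def summarize(sessions: Sequence[Session]) -> dict:
    """Compute a handful of A/B testing metrics for one variant."""
    n = len(sessions)
    orders = [s.order_value for s in sessions if s.converted]
    cta_views = sum(s.cta_views for s in sessions)
    return {
        "conversion_rate": len(orders) / n,
        # A bounce is a session that viewed only one page.
        "bounce_rate": sum(1 for s in sessions if s.pages_viewed == 1) / n,
        "ctr": sum(s.cta_clicks for s in sessions) / cta_views if cta_views else 0.0,
        "aov": sum(orders) / len(orders) if orders else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical sessions for a single variant.
    variant_b = [
        Session(pages_viewed=1, converted=False, cta_views=1),
        Session(pages_viewed=4, converted=True, order_value=50.0, cta_views=1, cta_clicks=1),
        Session(pages_viewed=6, converted=True, order_value=70.0, cta_views=2, cta_clicks=1),
        Session(pages_viewed=2, converted=False, cta_views=1),
    ]
    print(summarize(variant_b))
```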

Read our full chapter on A/B testing metrics to make sure you collect the most essential data to analyze your experiment.

How to understand the ‘why’ behind your A/B testing results

In A/B testing, quantitative metrics are essential for identifying the best-performing variation of a site or product. But even the best-designed A/B tests can’t pinpoint the exact reasons why one variation succeeds over another. For example, why does one version of a CTA work better than the other, and how can you replicate its success across your website?

Quantitative and qualitative A/B testing metrics should work in harmony: quantitative data answers the what, and qualitative data tells you why.

Capabilities like Contentsquare’s Zone-Based Heatmaps, Session Replay, Surveys, and Feedback Collection integrate with traditional A/B testing tools to help you better understand variant performance.

During a test, you can track user behavior and collect insights to understand how each variation affects the user experience on your website. Then, use these qualitative tools to explore more in-depth ideas, learn about common user pain points, or discover which product features are most interesting to them:

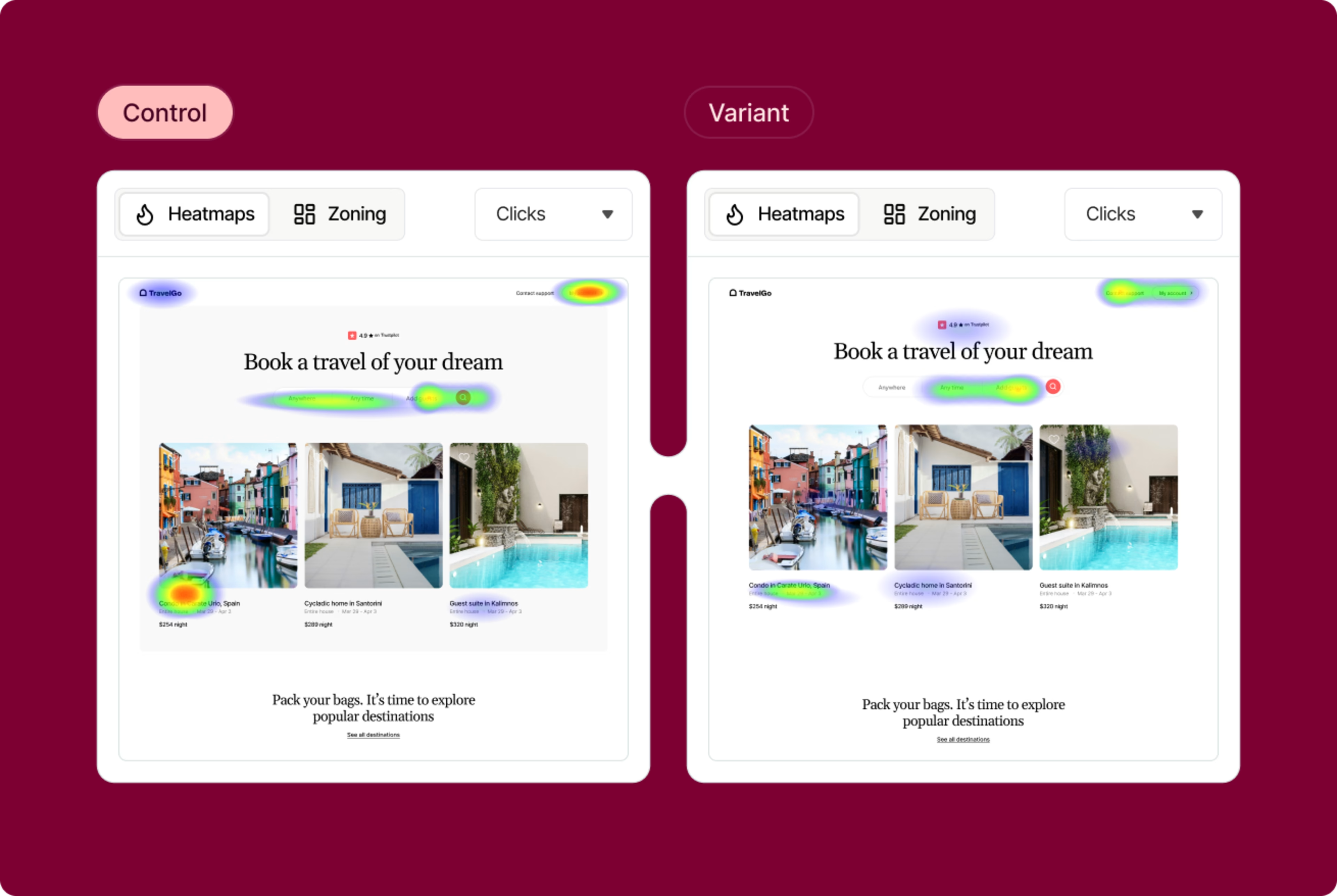

Session replays and heatmaps let you visualize how users react to different variations of a specific page, feature, or iteration. What are visitors drawn to, and what confuses them?

Surveys take the guesswork out of understanding user behavior during A/B testing by letting users tell you exactly what they need from, or think about, each variation.

Feedback widgets give you instant visual insights from real users. Users can rate their experience with a variant on a scale, provide context and details, and even screenshot a specific page element before and after you make a change.

![[Visual] Feedback-Button rate your experience](http://images.ctfassets.net/gwbpo1m641r7/1jtKOa90vakvficfgrrXTz/81dc0f40dff6d9257d91872c4efed9cb/Feedback_button__1_.png?w=3840&q=100&fit=fill&fm=avif)

The Contentsquare Feedback Collection widget, powered by Hotjar

Next steps to A/B testing

Like with any CRO process, you’re not only looking for the winning A/B test variation—you need actionable learnings that you can apply to your website to improve the customer experience.

If your variation is a winner, congratulations! 🎉 Deploy it, draw conclusions based on the data, and translate them into practical insights that you can use to improve your website. See if you can apply what you learned from the experiment to other pages of your site, and keep iterating to build on your statistically significant results.

Remember that inconclusive and 'failed' tests give you a clear idea of what doesn't work for your users. There are no losses in A/B testing—only learning.

FAQs about A/B testing

A/B testing, also known as split testing, is the process of running two different versions of a web page or website in a controlled experiment to see which one resonates with and converts the most users. A/B tests provide valuable insights into user behavior, allowing you to identify which elements impact your conversion rates the most, so you can make changes that drive more people to take a desired action on your product or site.

![[Visual] Contentsquare's Content Team](http://images.ctfassets.net/gwbpo1m641r7/3IVEUbRzFIoC9mf5EJ2qHY/f25ccd2131dfd63f5c63b5b92cc4ba20/Copy_of_Copy_of_BLOG-icp-8117438.jpeg?w=1920&q=100&fit=fill&fm=avif)